How to Build a Production-Ready AI Agent?

- Carlos Martinez

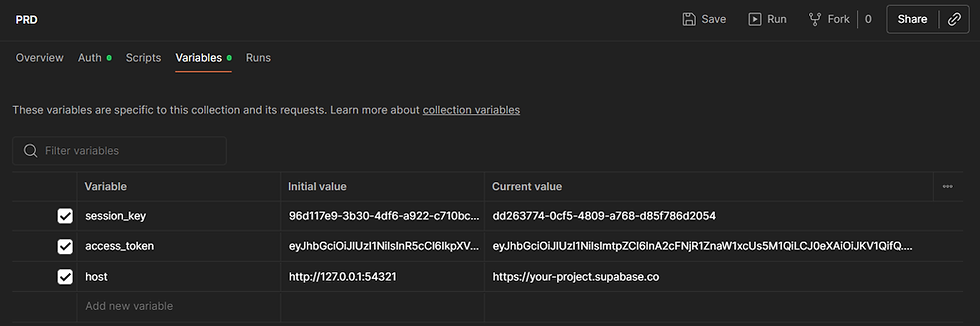

- Sep 5, 2025


Many AI agents that work in controlled tests fail in real-world use: they lose context, misprocess files, or expose security gaps. The model itself is rarely the problem; the surrounding architecture usually is. Agents require structured workflows, clear responsibilities, reliable state management, and predictable system behavior to operate consistently.

In this guide, we’ll focus on a concrete use case: building an AI system that generates production-ready Product Requirements Documents (PRDs). The system collects requirements through conversation, processes uploaded files, and produces consistent, branded PDFs.

To make this work in production, we’ll implement a multi-agent architecture with OpenRouter, deploy on Supabase Edge Functions, enforce enterprise authentication and database-level security, and enable real-time document preview and storage. Each step includes practical code you can adapt and deploy.

System Overview

The system is designed to generate Product Requirements Documents (PRDs) through coordinated AI agents. Its main capabilities include:

Collecting requirements through conversational input.

Generating structured PDFs with consistent formatting.

Processing PDF files as input.

Enforcing authentication and permissions with Google OAuth and Row-Level Security (RLS).

Scaling automatically using serverless edge functions.

Deploying updates through GitHub Actions with minimal operational overhead.

The architecture focuses on simplicity and reliability: minimal frameworks, direct SQL queries, stateless functions, and database-enforced security.

Technology Stack

Backend and Infrastructure

Supabase: PostgreSQL database, authentication, storage, and edge functions.

Deno/TypeScript: Runtime for serverless edge functions.

Row-Level Security (RLS): Automatic enforcement of database-level permissions.

AI and LLMs

OpenRouter: Unified API for multiple LLM providers.

OpenAI GPT-4.1-mini: Primary model for document generation.

File Parser Plugin: Handles PDF processing via OpenRouter.

Multi-Agent Architecture: Specialized agents coordinated without heavy frameworks.

Core Libraries

jsPDF: Generates PDFs directly in JavaScript.

marked: Converts Markdown to HTML.

Standard Web APIs: fetch(), FormData, ArrayBuffer, atob(), btoa().

Integrations and Tools

Google OAuth: Enterprise authentication.

Supabase Storage: Secure file storage with signed URLs.

GitHub Actions: Automated CI/CD pipeline.

Minimalist Architecture Decisions

No LangChain: Uses a lightweight 60-line OpenRouter client.

No Puppeteer: PDFs generated with jsPDF, reducing latency.

No heavy frameworks: Only Deno and standard web APIs.

No ORM overhead: Direct SQL queries with type safety.

Stateless functions: RLS enforces permissions at the database level.

Production-Ready System Architecture

📦 AI-Powered PRD Generation System (Real Technologies)
├── 🧠 Multi-Agent AI Core (No LangChain)
│   ├── PRD Agent (requirements gathering + PDF processing)
│   ├── Markdown Agent (structured document generation)
│   └── Message Router (rule-based routing logic)
├── ⚡ Serverless Edge Functions (Deno runtime)
│   ├── ai-agents (multi-agent orchestrator)
│   ├── get-create-session (session management)
│   ├── chat (conversational interface with file upload)
│   ├── pdf-preview (real-time preview generation)
│   └── download (Supabase Storage + signed URLs)
├── 🗄️ PostgreSQL Database (Supabase + User Context)
│   ├── session (user session management)
│   ├── conversation_messages (chat history & context)
│   └── prd (generated document metadata)
├── 🔗 Production Integrations
│   ├── OpenRouter (custom 60-line client)
│   ├── Google OAuth (enterprise authentication)
│   ├── Supabase Storage (secure file management)
│   └── GitHub Actions (automated CI/CD pipeline)
└── 📱 RESTful APIs (Frontend-agnostic)
    ├── FormData support (file uploads)
    ├── JSON responses (structured data)
    └── Standard HTTP methods (GET, POST)

Performance, Security, and Developer Experience

The following are observed production benchmarks and characteristics of the deployed system:

Performance: Cold starts consistently under 30 ms; PDF generation completes in 1–2 s; global edge latency stays below 100 ms.

Cost: Pay-per-execution model with no idle servers and minimal framework overhead.

Security: Database-level permissions enforced through RLS, Google OAuth for authentication, and signed URLs for controlled file access.

Developer Experience: End-to-end TypeScript, Git push deployments, and built-in logs and metrics through Supabase.

Technical Decision Matrix

| Component | Technology Used | Alternatives | Reason for Choice |
|---|---|---|---|
| Backend Runtime | Supabase Edge Functions (Deno) | AWS Lambda, Vercel Functions | Cold start <30 ms, native web APIs, TypeScript support |
| Database | Supabase PostgreSQL + RLS | Firebase, MongoDB, PlanetScale | ACID compliance, built-in RLS, familiar SQL |
| LLM Access | OpenRouter (60-line client) | LangChain, direct OpenAI API | Low overhead, multi-provider support |
| PDF Generation | jsPDF | Puppeteer, PDFKit, wkhtmltopdf | Faster, no headless browser overhead |
| Markdown Processing | marked | MDX, remark, markdown-it | Small bundle, fast parsing |
| File Handling | Web APIs | Multer, Formidable | Standards-based, zero dependencies |
| HTTP Client | fetch() | axios, node-fetch, ky | Built into Deno, Promise-based |
| Authentication | Supabase Auth + Google OAuth | Auth0, Firebase Auth, custom JWT | Direct RLS integration, enterprise-ready |
| File Storage | Supabase Storage + signed URLs | AWS S3, Cloudinary | RLS support, built-in CDN, signed URLs |

Real Performance Benchmarks

Current system measurements:

Cold Start: 25-30ms (Deno edge functions).

Agent Response: 2-4 seconds (depending on LLM).

PDF Generation: 1-2 seconds (jsPDF vs 8-15s Puppeteer).

File Upload: Supports files up to 20MB (configured in code).

Global Latency: <100ms worldwide (Supabase edge CDN).

Production-configured limits:

Message length: 10,000 characters maximum.

File size: 20MB limit (MAX_FILE_SIZE constant).

PDF validation: Automatic (checks %PDF header).

Signed URL expiry: 1 hour.
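The limits above are enforced in code before any LLM call is made. The sketch below shows one way to write those checks. Only `MAX_FILE_SIZE` comes from the original; `MAX_MESSAGE_LENGTH`, `validateMessage`, and `validatePdfUpload` are hypothetical names. The sketch also assumes that "20MB" means 20 × 1024 × 1024 bytes and that the upload is read into a `Uint8Array`, for example with `new Uint8Array(await file.arrayBuffer())` on a `File` taken from `FormData`.

```typescript
// Hypothetical constants mirroring the documented limits.
const MAX_MESSAGE_LENGTH = 10_000; // String.length, i.e. UTF-16 code units
const MAX_FILE_SIZE = 20 * 1024 * 1024; // assumes 20MB = 20 MiB

// "%PDF" as ASCII bytes; PDF files start with this header.
const PDF_MAGIC = [0x25, 0x50, 0x44, 0x46];

type ValidationResult = { ok: true } | { ok: false; error: string };

function validateMessage(message: string): ValidationResult {
  if (message.length > MAX_MESSAGE_LENGTH) {
    return { ok: false, error: `Message exceeds ${MAX_MESSAGE_LENGTH} characters` };
  }
  return { ok: true };
}

// Checks the size first, then the %PDF header in the first four bytes.
function validatePdfUpload(bytes: Uint8Array): ValidationResult {
  if (bytes.byteLength > MAX_FILE_SIZE) {
    return { ok: false, error: "File exceeds the 20MB limit" };
  }
  const hasHeader =
    bytes.byteLength >= PDF_MAGIC.length &&
    PDF_MAGIC.every((byte, i) => bytes[i] === byte);
  if (!hasHeader) {
    return { ok: false, error: "File is not a PDF (missing %PDF header)" };
  }
  return { ok: true };
}
```

The header check only confirms that the bytes claim to be a PDF. It is a cheap first filter, not a full structural validation.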

Code Quality & Simplicity

Project metrics:

Total functions: 5 edge functions + 6 shared utilities.

Dependencies: Minimal (jsPDF, marked, web APIs).

Custom code: ~95% of functionality.

Third-party complexity: Only OpenRouter client (60 lines).

Database queries: Direct SQL with type safety.

Step 1: Set up OpenRouter API Key

The PRD agent relies on LLMs to gather requirements, structure content, and produce the Markdown that is rendered into PDFs. Using OpenRouter instead of calling OpenAI directly adds production flexibility: you can switch models, reduce rate-limit issues, and keep billing unified. This makes the system more resilient and cost-efficient over the long term.

1.1 Why OpenRouter instead of direct OpenAI?

Key advantages in a production setup:

Multi-provider access: Connect to OpenAI, Anthropic, Google, Meta, and others via a single API.

Flexible switching: Change providers without modifying your code.

Cost optimization: Compare model prices in real time.

Rate limit pooling: Reduces throttling compared to direct API calls.

Unified billing: Single invoice for multiple providers.
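OpenRouter exposes one OpenAI-style chat completions endpoint for every provider, so switching providers comes down to changing the model slug. The sketch below builds a request without sending it, which makes it easy to see that only the `model` field changes. The endpoint and headers match the client in Step 8. `buildChatRequest` is a hypothetical helper, and `anthropic/claude-3.5-sonnet` is only an example slug; check OpenRouter's model list for current identifiers.

```typescript
type ChatMessage = { role: "system" | "user" | "assistant"; content: string };

const OPENROUTER_CHAT_URL = "https://openrouter.ai/api/v1/chat/completions";

// Builds (but does not send) an OpenRouter chat completion request.
// Only the `model` slug differs between providers.
function buildChatRequest(apiKey: string, model: string, messages: ChatMessage[]): Request {
  return new Request(OPENROUTER_CHAT_URL, {
    method: "POST",
    headers: {
      Authorization: `Bearer ${apiKey}`,
      "Content-Type": "application/json",
    },
    body: JSON.stringify({ model, messages }),
  });
}

const messages: ChatMessage[] = [{ role: "user", content: "Summarize this PRD." }];
const viaOpenAI = buildChatRequest("sk-or-v1-example", "openai/gpt-4.1-mini", messages);
const viaAnthropic = buildChatRequest("sk-or-v1-example", "anthropic/claude-3.5-sonnet", messages);
// To send one: const res = await fetch(viaOpenAI);
```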

1.2 Create an account and get an API key

1. Visit OpenRouter API Keys.

2. Sign up with GitHub or Google (recommended for enterprise use).

3. Click Create API Key.

4. Configure the key:

Name: PRD-Generator-Production

Rate limit: 100 requests/minute (adjustable later)

5. Save the API key securely. Format: sk-or-v1-xxxxxxxxxxxxx.

Step 2: Create Supabase Project

The PRD agent needs a backend that can manage sessions, enforce authentication, and store generated documents securely. Supabase provides a PostgreSQL database, authentication, storage, and serverless edge functions in a single stack. This allows the system to remain simple and production-ready without adding multiple services.

2.1 Create the project

1. Go to Supabase and create an account.

2. Click "Start your project".

3. Select your organization.

4. Project configuration:

Name: prd-backend

Database Password: generate a secure password and save it.

Region: Select the closest to your users.

5. Click Create new project.

2.2 Configure Git Integration

1. Go to Project Settings > Integrations.

2. In the Git Integration section:

Connect your GitHub repository.

Branch: main.

Disable Auto-deploy when pushing to linked branch.

Disable Automatic branching.

3. Note the Project Reference ID from Project Settings > General and the anon key from Project Settings > API Keys.

Why disable auto-deploy? We'll use GitHub Actions for controlled deployments.

Step 3: Configure Google Authentication

The system is designed for enterprise use where access must be restricted to authenticated users. Using Google OAuth ensures secure sign-in with minimal code while integrating directly with Supabase’s Row-Level Security (RLS).

3.1 Configure in Supabase

1. Go to Authentication > Sign In / Providers.

2. Enable Google as a sign-in provider.

3. Keep this form open; you will return to it in step 3.3.

4. Copy the Callback URL shown in the form (for example, https://[project].supabase.co/auth/v1/callback).
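The callback URL follows a fixed pattern derived from the project reference ID noted in Step 2.2. A small helper can derive it, which is handy when checking configuration in scripts. In this sketch, `supabaseUrls` is a hypothetical name. It assumes the default `<ref>.supabase.co` domain (not a custom domain) and lowercase alphanumeric refs.

```typescript
// Hypothetical helper: derives standard endpoints from a Supabase project ref,
// assuming the default <ref>.supabase.co domain.
function supabaseUrls(projectRef: string) {
  if (!/^[a-z0-9]+$/.test(projectRef)) {
    throw new Error(`Unexpected project ref format: ${projectRef}`);
  }
  const base = `https://${projectRef}.supabase.co`;
  return {
    apiUrl: base, // value used as SUPABASE_URL
    oauthCallbackUrl: `${base}/auth/v1/callback`, // Google "Authorized redirect URIs"
    functionsUrl: `${base}/functions/v1`, // base path for edge functions
  };
}
```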

3.2 Configure in Google Cloud Console

1. Go to Google Cloud Console.

2. Create a new project or select an existing one.

3. Go to APIs & Services > Credentials.

4. Click "+ CREATE CREDENTIALS" > "OAuth client ID".

5. If this is your first time, configure the OAuth consent screen first:

User Type: External

App name: PRD Generator

User support email: your email

Developer contact: your email

6. Return to Credentials > + CREATE CREDENTIALS > OAuth client ID.

Application type: Web application.

Name: PRD Generator Web Client

Authorized JavaScript origins: http://localhost:3000 (for local development).

Authorized redirect URIs: Paste the Callback URL copied from Supabase.

3.3 Complete Supabase configuration

1. Return to the open form in Supabase.

2. Client ID: [Paste the Client ID from Google]

3. Client Secret: [Paste the Client Secret from Google]

4. Click Save.

Step 4: Configure Storage

The PRD system generates PDFs and requires secure storage. Some files (like PDFs) must be private, while others (like logos) need to be public. Supabase Storage supports both and integrates directly with RLS for controlled access.

4.1 Create Private Bucket for PDFs

1. Go to Storage in Supabase.

2. Click "New bucket".

3. Configuration:

Name: prd-production

Public bucket: DISABLED

4. Click "Create bucket".
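Because `prd-production` is private, downloads go through short-lived signed URLs, matching the one-hour expiry listed in the limits earlier. supabase-js exposes this as `storage.from(bucket).createSignedUrl(path, expiresIn)`. The sketch below depends only on a minimal structural type for that one method, so it can be exercised with a fake. `SignedUrlBucket` and `getPrdDownloadUrl` are hypothetical names.

```typescript
// Minimal structural type mirroring the one supabase-js method used here
// (supabase.storage.from(bucket).createSignedUrl).
interface SignedUrlBucket {
  createSignedUrl(
    path: string,
    expiresIn: number,
  ): Promise<{ data: { signedUrl: string } | null; error: { message: string } | null }>;
}

const SIGNED_URL_TTL_SECONDS = 60 * 60; // 1 hour

// Hypothetical helper: returns a time-limited download link for a stored PRD PDF.
async function getPrdDownloadUrl(bucket: SignedUrlBucket, pdfPath: string): Promise<string> {
  const { data, error } = await bucket.createSignedUrl(pdfPath, SIGNED_URL_TTL_SECONDS);
  if (error || !data) {
    throw new Error(`Could not sign ${pdfPath}: ${error?.message ?? "no data returned"}`);
  }
  return data.signedUrl;
}

// Assumed usage in the download function, after checking the PRD belongs to the caller:
// const url = await getPrdDownloadUrl(createAdminClient().storage.from("prd-production"), prd.prd_pdf_path);
```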

4.2 Create Public Bucket for Assets

1. Click "New bucket" again.

2. Configuration:

Name: public-content

Public bucket: ENABLED

3. Click "Create bucket".

4.3 Upload Logo

1. Open the public-content bucket.

2. Click "Upload file".

3. Upload your logo.png file.

4. After upload, click the file and copy the public URL.

5. Save this as LOGO_URL for later use.
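Public buckets serve objects at a predictable path, so `LOGO_URL` has the form `<SUPABASE_URL>/storage/v1/object/public/<bucket>/<path>`. You may prefer to pass the logo to the PDF step as a base64 data URL, one of the input formats jsPDF's `addImage` accepts. In that case the downloaded bytes need converting. Both helpers below are hypothetical sketches, not part of the original codebase.

```typescript
// Builds the public URL for an object in a public Supabase Storage bucket.
function publicObjectUrl(supabaseUrl: string, bucket: string, path: string): string {
  const base = supabaseUrl.replace(/\/+$/, ""); // drop trailing slashes
  const encodedPath = path.split("/").map(encodeURIComponent).join("/");
  return `${base}/storage/v1/object/public/${bucket}/${encodedPath}`;
}

// Converts raw image bytes to a base64 data URL.
function bytesToDataUrl(bytes: Uint8Array, mimeType: string): string {
  let binary = "";
  const CHUNK = 0x8000; // chunking avoids too many arguments to fromCharCode
  for (let i = 0; i < bytes.length; i += CHUNK) {
    binary += String.fromCharCode(...Array.from(bytes.subarray(i, i + CHUNK)));
  }
  return `data:${mimeType};base64,${btoa(binary)}`;
}

// Assumed usage inside an edge function (network call, not run here):
// const res = await fetch(LOGO_URL);
// const logo = bytesToDataUrl(new Uint8Array(await res.arrayBuffer()), "image/png");
```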

Step 5: Set up GitHub Repository and Local Development

Source control and a reproducible local environment are critical for production systems. The GitHub repository ensures every change is tracked, versioned, and tied to CI/CD pipelines. Supabase CLI provides the local structure to define migrations, edge functions, and configuration files, so the system behaves the same locally as it will in production.

5.1 Install Supabase CLI and set up repository

# Clone the template or create new repository

git clone https://github.com/your-username/prd-mobile-backend.git

cd prd-mobile-backend

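# Install Supabase CLI (required for development commands)

# Global npm installs are not supported; use Homebrew or a project dev dependency

brew install supabase/tap/supabase

# Alternative: npm install supabase --save-dev, then prefix commands with npx

# Verify installation

supabase --version

# Initialize Supabase project structure

supabase init

Note: The Supabase CLI is needed during development to scaffold files and the project structure.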

Deployment runs through GitHub Actions, so no local CLI is required for it.

5.2 Project structure after initialization

After running supabase init, you'll have:

prd-mobile-backend/
└── supabase/
    ├── config.toml    # Supabase configuration
    ├── migrations/    # SQL files that execute automatically
    └── functions/     # Edge functions deployed via workflow

Step 6: Create Database Migrations (CLI Required)

Your database schema defines the system’s backbone. Without migrations, schema changes risk being inconsistent across environments. Using Supabase migrations ensures the same schema applies automatically in local development, staging, and production, with full version control in GitHub. For this system, we need three core tables:

session: Tracks each authenticated user’s active conversation.

conversation_messages: Stores chat history for context and auditing.

prd: Stores generated PRD document metadata (including file paths).

Together, these provide reliable state management for the agent system.

6.1 Create migration for main tables

# Create migration file

supabase migration new create_core_tables

Edit the generated file supabase/migrations/[timestamp]_create_core_tables.sql:

-- Create table session

create table "public"."session" (

"id" uuid not null default gen_random_uuid(),

"user_id" uuid,

"created_at" timestamp with time zone default now(),

"updated_at" timestamp with time zone default now(),

constraint "session_pkey" primary key ("id"),

constraint "session_user_id_fkey" foreign key ("user_id") references "auth"."users"("id") on delete cascade

);

-- Create table prd

create table "public"."prd" (

"id" uuid not null default gen_random_uuid(),

"session_id" uuid not null,

"prd_pdf_path" text not null,

"created_at" timestamp with time zone default now(),

"updated_at" timestamp with time zone default now(),

constraint "prd_pkey" primary key ("id"),

constraint "prd_prd_pdf_path_key" unique ("prd_pdf_path"),

constraint "prd_session_id_fkey" foreign key ("session_id") references "session"("id") on delete cascade

);

-- Indices to improve performance

CREATE INDEX idx_session_user_id ON public.session USING btree (user_id);

CREATE INDEX idx_prd_session_id ON public.prd USING btree (session_id);

-- Simple grants without RLS - keep it simple

grant all on table "public"."session" to "authenticated";

grant all on table "public"."prd" to "authenticated";

grant all on table "public"."session" to "service_role";

grant all on table "public"."prd" to "service_role";

6.2 Create migration for conversation messages

# Create migration file

supabase migration new create_conversation_messages

Edit the generated file supabase/migrations/[timestamp]_create_conversation_messages.sql:

-- Create conversation_messages table for storing chat history

-- This table stores the conversation history between users and AI agents

create table "public"."conversation_messages" (

"id" uuid not null default gen_random_uuid(),

"session_id" uuid not null,

"role" text not null,

"content" text not null,

"agent_type" text,

"created_at" timestamp with time zone default now(),

"updated_at" timestamp with time zone default now(),

constraint "conversation_messages_pkey" primary key ("id"),

constraint "conversation_messages_session_id_fkey" foreign key ("session_id") references "public"."session"("id") on delete cascade,

constraint "conversation_messages_role_check" check (role in ('user', 'assistant', 'system'))

);

-- Indices to improve performance

CREATE INDEX idx_conversation_messages_session_id ON "public"."conversation_messages" USING btree (session_id);

CREATE INDEX idx_conversation_messages_created_at ON "public"."conversation_messages" USING btree (session_id, created_at);

-- ========================================

-- GRANTS AND PERMISSIONS

-- ========================================

-- Simple grants without RLS - keep it simple

GRANT ALL ON "public"."conversation_messages" TO authenticated;

GRANT ALL ON "public"."conversation_messages" TO service_role;

6.3 Migrations execute automatically via GitHub Actions

Once you push these files to GitHub, the GitHub Actions workflow applies the migrations automatically. We set up that workflow later in this guide.

Data architecture recap:

session: One session per agent conversation.

conversation_messages: Complete chat history (user/assistant).

prd: Generated document metadata (PDF path, session_id).

User context: Automatic via Supabase Auth integration.
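Because the edge functions are stateless, each agent call rebuilds its context from `conversation_messages`. The sketch below shows one way to turn those rows into chat messages. `buildChatHistory` and the `MAX_HISTORY` cap are hypothetical. The cap is one assumed way to keep prompts within the model's context window. Dropping stored `system` rows in favor of the current prompt is a design choice of this sketch, not something the original specifies.

```typescript
// Shape of a row in public.conversation_messages (columns from the migration).
interface ConversationMessageRow {
  id: string;
  session_id: string;
  role: "user" | "assistant" | "system";
  content: string;
  agent_type: string | null;
  created_at: string; // ISO timestamp
}

type ChatMessage = { role: "system" | "user" | "assistant"; content: string };

const MAX_HISTORY = 40; // hypothetical cap on replayed messages

// Rebuilds the LLM context: current system prompt first, then the most recent
// stored messages in chronological order.
function buildChatHistory(systemPrompt: string, rows: ConversationMessageRow[]): ChatMessage[] {
  const ordered = [...rows].sort(
    (a, b) => Date.parse(a.created_at) - Date.parse(b.created_at),
  );
  const recent = ordered
    .filter((row) => row.role !== "system") // the current prompt replaces stored ones
    .slice(-MAX_HISTORY);
  return [
    { role: "system", content: systemPrompt },
    ...recent.map((row) => ({ role: row.role, content: row.content })),
  ];
}
```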

Step 7: Create Edge Functions (CLI Required)

Edge functions are the execution layer of the system. Each function has a single responsibility (agent orchestration, session management, chat, preview, download) and runs close to the user with <100ms global latency. This separation ensures scalability, security, and easier debugging. Shared utilities (auth, database, response handling) prevent duplicated code and enforce consistent patterns across all functions.
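Every function follows the same request lifecycle. It answers the CORS preflight, authenticates the caller, does its one job, and returns JSON. The sketch below inlines simplified stand-ins for the shared helpers defined later in this step, so it runs on its own. In a real function you would import those helpers from `../_shared/` and pass the handler to `Deno.serve`. Note that `requireAuth` throws a `Response` rather than an `Error`, so the handler returns caught `Response` objects as-is. `makeHandler` and the `authenticate` parameter are hypothetical; `authenticate` stands in for `getUser`.

```typescript
// Simplified stand-ins for _shared/cors.ts and _shared/response.ts.
const corsHeaders = {
  "Access-Control-Allow-Origin": "*",
  "Access-Control-Allow-Headers": "authorization, x-client-info, apikey, content-type",
  "Access-Control-Allow-Methods": "POST, GET, OPTIONS, PUT, DELETE",
};

const json = (data: unknown, status = 200): Response =>
  new Response(JSON.stringify(data), {
    status,
    headers: { ...corsHeaders, "Content-Type": "application/json" },
  });

type User = { id: string };
// Hypothetical seam: in production this would be getUser() from _shared/auth.ts.
type Authenticate = (req: Request) => Promise<User>;

function makeHandler(authenticate: Authenticate) {
  return async (req: Request): Promise<Response> => {
    // 1. Answer the CORS preflight without touching auth.
    if (req.method === "OPTIONS") {
      return new Response("ok", { headers: corsHeaders });
    }
    try {
      // 2. Authenticate; like requireAuth(), failures become a thrown 401 Response.
      const user = await authenticate(req).catch(() => {
        throw json({ error: "Unauthorized" }, 401);
      });
      // 3. The function's single responsibility goes here (placeholder).
      return json({ user_id: user.id });
    } catch (err) {
      // Thrown Responses pass through unchanged; anything else becomes a 500.
      if (err instanceof Response) return err;
      console.error(err);
      return json({ error: "Internal server error" }, 500);
    }
  };
}

// In supabase/functions/<name>/index.ts: Deno.serve(makeHandler(getUser));
```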

7.1 Create all functions

# Create the 5 main functions

supabase functions new ai-agents

supabase functions new get-create-session

supabase functions new chat

supabase functions new pdf-preview

supabase functions new download

7.2 Create shared utilities folder

# Create shared utilities folder

mkdir -p supabase/functions/_shared

7.3 Implement shared utilities

Let's create the base files that will be reused by all functions:

supabase/functions/_shared/types.ts

export interface AIServiceResponse {

output: string;

is_prd_complete?: boolean;

sections?: any[];

}

export interface PdfPreviewResponse {

markdown: string;

pdf_base64: string;

sections: any[];

}

export interface SessionData {

id: string;

user_id: string;

created_at: string;

updated_at: string;

}

supabase/functions/_shared/cors.ts

export const corsHeaders = {

'Access-Control-Allow-Origin': '*',

'Access-Control-Allow-Headers': 'authorization, x-client-info, apikey, content-type',

'Access-Control-Allow-Methods': 'POST, GET, OPTIONS, PUT, DELETE',

}

export const handleCors = (request: Request) => {

if (request.method === 'OPTIONS') {

return new Response('ok', { headers: corsHeaders })

}

}

supabase/functions/_shared/response.ts

import { corsHeaders } from './cors.ts'

export const createResponse = (data: any, status = 200) => {

return new Response(

JSON.stringify(data),

{

status,

headers: {

...corsHeaders,

'Content-Type': 'application/json',

},

}

)

}

export const createErrorResponse = (message: string, status = 400) => {

return createResponse({ error: message }, status)

}

supabase/functions/_shared/database.ts

import { createClient } from 'https://esm.sh/@supabase/supabase-js@2'

export const createSupabaseClient = () => {

return createClient(

Deno.env.get('SUPABASE_URL') ?? '',

Deno.env.get('SUPABASE_ANON_KEY') ?? '',

{

auth: {

autoRefreshToken: false,

persistSession: false,

detectSessionInUrl: false

},

db: {

schema: 'public'

}

}

)

}

export const createAdminClient = () => {

return createClient(

Deno.env.get('SUPABASE_URL') ?? '',

Deno.env.get('SUPABASE_SERVICE_ROLE_KEY') ?? '',

{

auth: {

autoRefreshToken: false,

persistSession: false,

detectSessionInUrl: false

},

db: {

schema: 'public'

}

}

)

}

// Create a client with user context for RLS operations

export const createUserClient = (accessToken: string) => {

const client = createClient(

Deno.env.get('SUPABASE_URL') ?? '',

Deno.env.get('SUPABASE_ANON_KEY') ?? '',

{

auth: {

autoRefreshToken: false,

persistSession: false,

detectSessionInUrl: false

},

db: {

schema: 'public'

},

global: {

headers: {

Authorization: `Bearer ${accessToken}`

}

}

}

)

return client

}

supabase/functions/_shared/auth.ts

import { createClient } from 'https://esm.sh/@supabase/supabase-js@2'

import { createErrorResponse } from './response.ts'

export const getUser = async (request: Request) => {

const token = request.headers.get('Authorization')?.replace('Bearer ', '')

if (!token) {

throw new Error('No authorization token provided')

}

const supabase = createClient(

Deno.env.get('SUPABASE_URL') ?? '',

Deno.env.get('SUPABASE_ANON_KEY') ?? '',

{

auth: { autoRefreshToken: false, persistSession: false }

}

)

const { data: { user }, error } = await supabase.auth.getUser(token)

if (error || !user) {

throw new Error('Invalid token')

}

return user

}

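// Throws a 401 Response (not an Error) on failure; callers should catch it and return it as the HTTP response

export const requireAuth = async (request: Request) => {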

try {

return await getUser(request)

} catch (error) {

throw createErrorResponse('Unauthorized', 401)

}

}

Step 8: Implement AI Agent System (No Heavy Frameworks)

8.1 Understanding the minimalist agent system

Our system uses two specialized agents plus a rule-based router:

1. PRD Agent (openai/gpt-4.1-mini):

Gathers information conversationally.

Processes PDF files via OpenRouter file parser.

Determines when information is complete.

Responds in structured JSON format.

2. Markdown Agent (openai/gpt-4.1-mini):

Converts conversations into structured PRD.

Generates professional-grade markdown.

Organizes sections automatically.

Optimized for PDF conversion.

3. Message Router (Logic-based):

Detects .generate_markdown command.

Routes on an exact command match.

No AI overhead for routing.
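For the PRD Agent's PDF handling, OpenRouter accepts a `file` content part whose `file_data` is a base64 data URL. This is the format noted in the `OpenRouterMessage` type of the client below. The request also carries a `file-parser` plugin entry. The sketch below shows one way to build that payload. The helper names are hypothetical, and the plugin options (`pdf.engine: "pdf-text"`) are an assumption you should check against OpenRouter's current PDF documentation.

```typescript
type ContentPart =
  | { type: "text"; text: string }
  | { type: "file"; file: { filename: string; file_data: string } };

interface PdfChatPayload {
  message: { role: "user"; content: ContentPart[] };
  plugins: Array<{ id: string; pdf: { engine: string } }>;
}

// Base64-encodes bytes in chunks (btoa expects a "binary string").
function toBase64(bytes: Uint8Array): string {
  let binary = "";
  for (let i = 0; i < bytes.length; i += 0x8000) {
    binary += String.fromCharCode(...Array.from(bytes.subarray(i, i + 0x8000)));
  }
  return btoa(binary);
}

// Hypothetical helper: wraps the user's text and an uploaded PDF into one message.
function buildPdfMessage(filename: string, pdfBytes: Uint8Array, userText: string): PdfChatPayload {
  return {
    message: {
      role: "user",
      content: [
        { type: "text", text: userText },
        {
          type: "file",
          file: { filename, file_data: `data:application/pdf;base64,${toBase64(pdfBytes)}` },
        },
      ],
    },
    // Assumed plugin config: OpenRouter's file parser with a text-extraction engine.
    plugins: [{ id: "file-parser", pdf: { engine: "pdf-text" } }],
  };
}
```

Assuming the client shown next, the payload maps onto its existing signature as `openRouter.chat('prd_agent', [...history, payload.message], 0.3, 2000, payload.plugins)`.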

8.2 OpenRouter Integration (Custom 60-Line Client)

Why a custom client instead of LangChain?

Custom 60-line client: Direct calls, no abstraction overhead.

Benefits over LangChain: Faster, simpler, easier error handling, flexible model selection.

supabase/functions/ai-agents/utils/openrouter.ts

export interface OpenRouterMessage {

role: 'system' | 'user' | 'assistant';

content: string | Array<{

type: 'text' | 'file';

text?: string;

file?: {

filename: string;

file_data: string; // data:application/pdf;base64,... format

};

}>;

}

export interface OpenRouterResponse {

choices: Array<{

message: {

content: string;

};

}>;

usage?: {

prompt_tokens: number;

completion_tokens: number;

total_tokens: number;

};

}

export const MODELS = {

prd_agent: Deno.env.get('PRD_AGENT_MODEL') || 'openai/gpt-4.1-mini',

markdown_agent: Deno.env.get('MARKDOWN_AGENT_MODEL') || 'openai/gpt-4.1-mini'

} as const;

export class OpenRouterClient {

private apiKey: string;

private baseUrl = 'https://openrouter.ai/api/v1';

constructor() {

const apiKey = Deno.env.get('OPENROUTER_API_KEY');

if (!apiKey) {

throw new Error('OPENROUTER_API_KEY environment variable is required');

}

this.apiKey = apiKey;

}

async chat(

model: keyof typeof MODELS,

messages: OpenRouterMessage[],

temperature = 0.3,

maxTokens = 2000,

plugins?: Array<any>

): Promise<string> {

try {

const response = await fetch(`${this.baseUrl}/chat/completions`, {

method: 'POST',

headers: {

'Authorization': `Bearer ${this.apiKey}`,

'Content-Type': 'application/json'

},

body: JSON.stringify({

model: MODELS[model],

messages,

temperature,

max_tokens: maxTokens,

...(plugins && { plugins })

})

});

if (!response.ok) {

const errorText = await response.text();

throw new Error(`OpenRouter API error: ${response.status} - ${errorText}`);

}

const data: OpenRouterResponse = await response.json();

if (!data.choices || data.choices.length === 0) {

throw new Error('No response from OpenRouter API');

}

return data.choices[0].message.content;

} catch (error) {

console.error('OpenRouter API error:', error);

throw new Error(`Failed to get response from AI model: ${error instanceof Error ? error.message : 'Unknown error'}`);

}

}

}

// Singleton instance

export const openRouter = new OpenRouterClient();

8.3 Implement specialized prompts
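Both prompts in this step ask the model to reply with a bare JSON object. In practice, models occasionally wrap the object in a Markdown fence or answer in plain text, which are the "Invalid" cases the PRD prompt lists. A defensive parser keeps one malformed reply from breaking the conversation. The sketch below is one way to write it. `parseAgentReply` is a hypothetical name, and its return type mirrors `AIServiceResponse` from `_shared/types.ts`.

```typescript
interface AIServiceResponse {
  output: string;
  is_prd_complete?: boolean;
  sections?: unknown[];
}

// Parses a model reply that should be JSON, tolerating a surrounding ``` fence.
// Falls back to treating the raw text as the conversational output.
function parseAgentReply(raw: string): AIServiceResponse {
  const trimmed = raw.trim();
  // Strip a wrapping ```json ... ``` (or bare ```) fence if present.
  const fenced = trimmed.match(/^```(?:json)?\s*([\s\S]*?)\s*```$/);
  const candidate = fenced ? fenced[1] : trimmed;
  try {
    const parsed = JSON.parse(candidate);
    if (parsed && typeof parsed.output === "string") {
      const result: AIServiceResponse = {
        output: parsed.output,
        is_prd_complete: parsed.is_prd_complete === true,
      };
      if (Array.isArray(parsed.sections)) result.sections = parsed.sections;
      return result;
    }
  } catch {
    // Not valid JSON: fall through to the plain-text fallback.
  }
  return { output: trimmed, is_prd_complete: false };
}
```

Treating unparseable replies as incomplete errs on the side of continuing the conversation rather than triggering PDF generation.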

supabase/functions/ai-agents/utils/prompts.ts

export const PRD_AGENT_PROMPT = `# Leanware Requirements Agent

You are the Leanware Requirements Agent, an AI-powered conversational tool designed to collect detailed software development requirements from potential clients and generate comprehensive PRDs.

## Primary Goal

Gather comprehensive project requirements through natural conversation, asking targeted questions to uncover all necessary information for creating detailed PRDs.

## Output Format

You MUST respond only in this JSON format:

{

"output": "Your natural conversational response here",

"is_prd_complete": false

}

Set "is_prd_complete" to true only when you have gathered sufficient information across all requirement areas and the PRD is comprehensive and complete.

## Core Requirements to Gather

### 1. Project Overview & Objectives

- Primary purpose and goals of the software

- Target users and their needs

- Problems the software will solve

- Application type (web, mobile, desktop, API)

### 2. Project Approach

- Development methodology preferences

- Team collaboration preferences

- Project management approach

### 3. Technical Requirements

- Frontend technologies and platforms

- Backend requirements (databases, APIs, integrations)

- Authentication and authorization needs

- Security and compliance requirements (GDPR, HIPAA, etc.)

- Performance and scalability expectations

- Preferred tech stack (if any)

### 4. Scope & Functionality

- Core features and user stories

- User roles and permissions

- Admin functionality requirements

- Integration needs with existing systems

- Edge cases and error handling

### 5. Timeline & Constraints

- Expected project duration and milestones

- Priority ranking: Quality vs Timeline vs Scope

- Phased approach preferences

- Resource constraints and considerations

### 6. Design & UX

- Existing branding or style guides

- Design system needs

- UI/UX requirements and inspiration

## Conversation Guidelines

1. **Be conversational and warm** - Use a friendly, professional tone

2. **Ask focused questions** - One area at a time, avoid overwhelming the user

3. **Build on previous answers** - Don't repeat questions already answered

4. **Probe for specifics** - Ask follow-up questions for vague responses

5. **Confirm understanding** - Summarize key points before moving to new areas

6. **Guide the conversation** - Always include clear next steps

## Key Questions to Ask

**Initial**: "What do you want to build? Can you give me a brief description of your project?"

**Project Approach**: "How would you prefer to work with our team?

1. Collaborative development with your existing team

2. Full project delivery by our team

3. Not sure yet (let's discuss based on your needs)"

**Features**: "What are the main features or modules of your application?"

- Follow up with user flows, admin needs, settings, notifications

- Ask about edge cases and integration points for each feature

**Technical**: "Do you have preferred technologies, or should we recommend based on your needs?"

- Authentication methods (Google, email/password, SSO)

- External API integrations

- Security and compliance requirements

**Timeline**: "What's your desired timeline and key milestones?"

## Modification Mode

When users want to modify existing requirements:

1. Acknowledge the specific change request

2. Gather the updated information

3. Validate the changes are complete

4. Set \`is_prd_complete\` to \`true\` when modifications are finished

## Completion

When requirements are complete, use responses like:

- {"output": "Perfect! I have all the information needed for your project requirements. Your comprehensive PRD is now complete and ready for review.", "is_prd_complete": true}

**IMPORTANT**: Setting \`is_prd_complete\` to \`true\` indicates that the PRD gathering process is finished.

## Example Responses

Valid:

- {"output": "Welcome to Leanware! I'm here to help gather your project requirements. What would you like to build?", "is_prd_complete": false}

- {"output": "Thanks for those details! To better understand your needs, what are the main features users will interact with?", "is_prd_complete": false}

- {"output": "Excellent! Your requirements are complete. Your PRD is now ready.", "is_prd_complete": true}

Invalid:

- \`\`\`json {"output": "Great!", "is_prd_complete": false}\`\`\` (wrong format)

- Plain text responses without JSON structure`;

export const MARKDOWN_AGENT_PROMPT = `You are the Markdown Agent. Generate a comprehensive PRD in markdown format from the gathered requirements data.

## Core Rules

1. **Data Processing**: Extract and interpret all available requirement information

2. **Content Quality**: Only include sections with sufficient information - omit incomplete sections

3. **Format**: Generate clean markdown without code blocks or fences

4. **Structure**: Organize content according to the specified sections below

## Content Guidelines

1. **Follow the Contents structure** - Use only the sections specified below

2. **Skip incomplete sections** - If insufficient information exists, omit that section

3. **No placeholder content** - Avoid "TBD" or "To be determined" entries

4. **Focus on technical specs** - Exclude pricing and commercial information

# Table Generation Guidelines

When generating tables, use proper markdown syntax for correct PDF generation:

1. **Always use pipe separators (|)** for all table columns

2. **Include header separator row** with dashes (|---|---|---|)

3. **Keep column content concise** for better PDF layout

4. **Use consistent spacing** between pipes and content

## Feature Specification Tables

For organizing features and requirements, I will create tables like:

| Feature/User Story | Priority | Complexity | Dependencies | Notes |

|--------------------|----------|------------|--------------|-------|

| User Authentication | High | Medium | OAuth provider setup | Including social login |

| Dashboard Development | High | High | Data API, Charts library | Multiple data visualizations |

| API Integration | Medium | Medium | Third-party credentials | External service connections |

## Technical Requirements Tables

For technical specifications, I will create tables like:

| Component | Technology | Requirements | Notes |

|-----------|------------|--------------|-------|

| Frontend | React/Vue/Angular | Modern browser support | Responsive design |

| Backend | Node.js/Python/Java | API development | RESTful or GraphQL |

| Database | PostgreSQL/MongoDB | Data persistence | Scalable architecture |

## Timeline & Milestones Tables

For project planning, I will create tables like:

| Phase | Duration | Key Deliverables | Success Criteria |

|-------|----------|------------------|------------------|

| Phase 1 | 2-4 weeks | Core features | MVP functionality |

| Phase 2 | 4-6 weeks | Advanced features | Full feature set |

| Phase 3 | 2-3 weeks | Testing & deployment | Production ready |

## User Stories Tables

For user requirements, I will create tables like:

| As a... | I want to... | So that... | Acceptance Criteria |

|---------|--------------|------------|-------------------|

| End User | Login securely | Access my account | Email/password authentication |

| Admin | Manage users | Control access | CRUD operations for users |

## Complexity Reference Tables

When applicable, I will include complexity estimation references:

| Complexity | Description | Characteristics |

|------------|-------------|----------------|

| Very Low | Simple UI components, minor changes | Straightforward implementation |

| Low | Simple functionality, easy integrations | Standard patterns |

| Medium | Complete features, medium integrations | Some custom logic |

| High | Complex functionalities, multiple integrations | Advanced requirements |

| Very High | Complex systems, advanced algorithms | Cutting-edge technology |

## PRD Structure

Generate the following sections when sufficient information is available:

# Project Overview

## Name

## Purpose

## Application Type

(Include the type of solution: desktop, mobile, web, API, etc.)

## Scope

## Target Audience / Users

### Profiles

### Needs

### Pain Points

## Business Objectives

### Goals

### KPIs

### Success Metrics

## Core Features & Requirements

(Write at least 3-4 sentences per category; extend up to 6 if there is more detail to include. Include the admin module in the feature categories if it applies.)

### Feature categories

(Organize features logically, use Markdown subheadings (4th, 5th or 6th level) to differentiate groups of features and list each feature under the correct category—no extra line breaks between items)

### User Flows

(Describe each user journey step by step, in paragraphs or as a numbered list (1, 2, 3, ...).)

### Edge Cases

(Identify possible edge cases and how they should be handled.)

## Technical Requirements

(Write at least 3-4 sentences per category. Use fewer only when there is not much information.)

### Preferred Tech Stack

### Integrations

### Security

### Authentication

### Compliance

### Data management

(This includes logging and monitoring, load targets, and concurrency.)

## Testing

## Design & UX

## Timeline & Milestones

(Include project timeline, key milestones, and delivery phases)

## Project Approach

(Include recommended development methodology and team collaboration approach)

## Implementation Considerations

(Include technical considerations, risks, and assumptions for the project implementation)

## Additional information

You must respond with ONLY a JSON object in this format:

{

"output": "The complete markdown content here with proper table formatting",

"sections": [

{

"title": "Section title",

"sub_title": "Subsection title if applicable",

"content": "Section content"

}

]

}

Ensure all markdown tables use proper pipe (|) syntax with header separators for correct PDF rendering.`;

8.4 Simple Message Router (No AI)

supabase/functions/ai-agents/utils/message-router.ts

// Simple message router - decides which agent to use

export interface RoutingDecision {

target_agent: 'prd_agent' | 'markdown_agent';

reasoning: string;

}

/**

* Simple message routing logic - no AI needed for this basic validation

*/

export function routeMessage(userMessage: string): RoutingDecision {

if (userMessage.trim() === '.generate_markdown') {

return {

target_agent: 'markdown_agent',

reasoning: 'User requested markdown generation with exact command'

};

}

return {

target_agent: 'prd_agent',

reasoning: 'Default routing to PRD agent for requirements gathering'

};

}

8.5 Implement specialized agents

PRD Agent - supabase/functions/ai-agents/agents/prd-agent.ts

// PRD Agent - handles requirements gathering with text and PDF support

import { openRouter, OpenRouterMessage } from '../utils/openrouter.ts';

import { PRD_AGENT_PROMPT } from '../utils/prompts.ts';

export interface PRDAgentResponse {

output: string;

is_prd_complete: boolean;

}

export interface PDFInput {

filename: string;

base64Data: string; // Base64 encoded PDF

}

export class PRDAgent {

async processMessage(

userMessage: string,

conversationHistory: OpenRouterMessage[] = [],

pdfInput?: PDFInput

): Promise<PRDAgentResponse> {

// Build conversation context with optional PDF content

const messages: OpenRouterMessage[] = [

{ role: 'system', content: PRD_AGENT_PROMPT },

...conversationHistory

];

// Create user message with optional PDF attachment

if (pdfInput) {

// Use OpenRouter's native file support

messages.push({

role: 'user',

content: [

{

type: 'text',

text: userMessage

},

{

type: 'file',

file: {

filename: pdfInput.filename,

file_data: `data:application/pdf;base64,${pdfInput.base64Data}`

}

}

]

});

} else {

// Simple text message

messages.push({

role: 'user',

content: userMessage

});

}

// PDF parser plugin for OpenRouter

const plugins = pdfInput ? [

{

id: "file-parser",

pdf: {

engine: "pdf-text" // Free engine for well-structured PDFs

}

}

] : undefined;

try {

const response = await openRouter.chat('prd_agent', messages, 0.3, 2000, plugins);

// Parse JSON response

const parsedResponse = this.parseResponse(response);

return parsedResponse;

} catch (error) {

console.error('PRD Agent error:', error);

// Fallback response

return {

output: "I apologize, but I'm experiencing technical difficulties. Could you please try again?",

is_prd_complete: false

};

}

}

private parseResponse(response: string): PRDAgentResponse {

try {

// Try to parse as JSON first

const parsed = JSON.parse(response);

// Validate required fields

if (typeof parsed.output === 'string' && typeof parsed.is_prd_complete === 'boolean') {

return parsed;

}

throw new Error('Invalid response format');

} catch (error) {

console.error('Failed to parse PRD agent response:', response);

// If JSON parsing fails, treat as plain text

return {

output: response,

is_prd_complete: false

};

}

}

}

// Singleton instance

export const prdAgent = new PRDAgent();

Markdown Agent - supabase/functions/ai-agents/agents/markdown-agent.ts

// Markdown Agent - generates PRD markdown from conversation

import { openRouter, OpenRouterMessage } from '../utils/openrouter.ts';

import { MARKDOWN_AGENT_PROMPT } from '../utils/prompts.ts';

export interface MarkdownSection {

title: string;

sub_title: string;

content: string;

}

export interface MarkdownAgentResponse {

output: string;

sections: MarkdownSection[];

}

export class MarkdownAgent {

async generateMarkdown(

prdData: any, // The collected PRD data from conversation

conversationHistory: OpenRouterMessage[] = []

): Promise<MarkdownAgentResponse> {

// Create context for markdown generation

const contextMessage = this.buildContext(prdData, conversationHistory);

const messages: OpenRouterMessage[] = [

{ role: 'system', content: MARKDOWN_AGENT_PROMPT },

{ role: 'user', content: contextMessage }

];

try {

const response = await openRouter.chat('markdown_agent', messages, 0.1, 4000);

// Parse JSON response

const parsedResponse = this.parseResponse(response);

return parsedResponse;

} catch (error) {

console.error('Markdown Agent error:', error);

// Fallback response

return {

output: "Please continue answering the questions to generate a preview.",

sections: []

};

}

}

private buildContext(prdData: any, conversationHistory: OpenRouterMessage[]): string {

let context = "Generate a comprehensive PRD markdown based on the following information:\n\n";

// Add conversation history context

if (conversationHistory.length > 0) {

context += "## Conversation History:\n";

conversationHistory.forEach((msg, index) => {

if (msg.role === 'user' || msg.role === 'assistant') {

context += `${msg.role}: ${msg.content}\n`;

}

});

context += "\n";

}

// Add any structured PRD data

if (prdData) {

context += "## Structured PRD Data:\n";

context += JSON.stringify(prdData, null, 2);

context += "\n";

}

context += "\nPlease generate a comprehensive PRD markdown document based on this information.";

return context;

}

private parseResponse(response: string): MarkdownAgentResponse {

try {

// Try to parse as JSON first

const parsed = JSON.parse(response);

// Validate required fields

if (typeof parsed.output === 'string' && Array.isArray(parsed.sections)) {

return parsed;

}

throw new Error('Invalid response format');

} catch (error) {

console.error('Failed to parse Markdown agent response:', response);

// If JSON parsing fails, treat as plain text markdown

return {

output: response,

sections: []

};

}

}

}

// Singleton instance

export const markdownAgent = new MarkdownAgent();

8.6 Implement main agent function

supabase/functions/ai-agents/types/agents.ts

export interface AgentState {

messages: Array<{

role: 'user' | 'assistant' | 'system';

content: string;

timestamp?: string;

}>;

session_id: string;

user_id: string;

prd_data?: any;

is_prd_complete: boolean;

current_agent?: string; // last agent that handled the session (assigned in index.ts)

markdown_result?: {

output: string;

sections: any[];

};

}

export interface AgentResponse {

output: string;

is_prd_complete?: boolean;

sections?: any[];

next_agent?: string;

}

supabase/functions/ai-agents/index.ts

// @ts-ignore: Deno global

declare const Deno: any

import { handleCors } from '../_shared/cors.ts'

import { requireAuth } from '../_shared/auth.ts'

import { createUserClient } from '../_shared/database.ts'

import { createResponse, createErrorResponse } from '../_shared/response.ts'

import { AgentState, AgentResponse } from './types/agents.ts'

import { OpenRouterMessage } from './utils/openrouter.ts'

import { routeMessage } from './utils/message-router.ts'

import { prdAgent, PDFInput } from './agents/prd-agent.ts'

import { markdownAgent } from './agents/markdown-agent.ts'

/**

* Main AI Agents handler - Simple OpenRouter implementation

*/

async function processAgentRequest(

sessionId: string,

userMessage: string,

userId: string,

authToken: string,

pdfInput?: PDFInput

): Promise<AgentResponse> {

try {

// Get current conversation state

const state = await getAgentState(sessionId, authToken);

// Step 1: Route message - decide which agent to use

const routing = routeMessage(userMessage);

// Step 2: Execute the appropriate agent

if (routing.target_agent === 'markdown_agent') {

// Generate markdown from existing PRD data

const result = await markdownAgent.generateMarkdown(

state.prd_data,

state.messages

);

// Update state

state.markdown_result = result;

await saveAgentState(state, authToken, 'markdown_agent');

return {

output: result.output,

sections: result.sections

};

} else {

// PRD Agent - gather requirements (with optional PDF support)

const result = await prdAgent.processMessage(

userMessage,

state.messages,

pdfInput

);

// Update conversation history

state.messages.push(

{ role: 'user', content: userMessage, timestamp: new Date().toISOString() },

{ role: 'assistant', content: result.output, timestamp: new Date().toISOString() }

);

// Update state

state.is_prd_complete = result.is_prd_complete;

state.current_agent = 'prd_agent';

// If PRD is complete, store the data for markdown generation

if (result.is_prd_complete) {

state.prd_data = {

conversation_history: state.messages,

completion_timestamp: new Date().toISOString()

};

}

await saveAgentState(state, authToken, 'prd_agent');

return {

output: result.output,

is_prd_complete: result.is_prd_complete

};

}

} catch (error) {

console.error('Error processing agent request:', error);

throw error;

}

}

/**

* Get or create agent state for session

*/

async function getAgentState(sessionId: string, authToken: string): Promise<AgentState> {

const supabase = createUserClient(authToken)

try {

// Get existing session

const { data: session, error: sessionError } = await supabase

.from('session')

.select('*')

.eq('id', sessionId)

.single()

if (sessionError || !session) {

throw new Error('Session not found')

}

// Get conversation history from conversation_messages table

const { data: messages, error: messagesError } = await supabase

.from('conversation_messages')

.select('role, content, agent_type, created_at')

.eq('session_id', session.id)

.order('created_at', { ascending: true })

if (messagesError) {

console.error('Error loading conversation history:', messagesError)

// Continue with empty messages if history loading fails

}

// Convert database messages to OpenRouter format

const conversationHistory: OpenRouterMessage[] = (messages || []).map(msg => ({

role: msg.role as 'user' | 'assistant' | 'system',

content: msg.content

}))

return {

messages: conversationHistory,

session_id: sessionId,

user_id: session.user_id,

is_prd_complete: false

}

} catch (error) {

console.error('Error getting agent state:', error)

throw new Error('Failed to get session state')

}

}

/**

* Save agent state to database

*/

async function saveAgentState(state: AgentState, authToken: string, currentAgent?: string): Promise<void> {

const supabase = createUserClient(authToken)

try {

// Update session timestamp

const { error: updateError } = await supabase

.from('session')

.update({

updated_at: new Date().toISOString()

})

.eq('id', state.session_id)

if (updateError) {

console.error('Error updating session timestamp:', updateError)

}

// Save new messages to conversation_messages table

// Only save messages that aren't already in the database

if (state.messages && state.messages.length > 0) {

// Get current message count to determine which messages are new

const { count: existingCount } = await supabase

.from('conversation_messages')

.select('*', { count: 'exact', head: true })

.eq('session_id', state.session_id)

const newMessages = state.messages.slice(existingCount || 0)

if (newMessages.length > 0) {

const messagesToInsert = newMessages.map(msg => ({

session_id: state.session_id,

role: msg.role,

content: msg.content,

agent_type: currentAgent || 'prd_agent'

}))

const { error: insertError } = await supabase

.from('conversation_messages')

.insert(messagesToInsert)

if (insertError) {

console.error('Error saving conversation messages:', insertError)

}

}

}

} catch (error) {

console.error('Error saving agent state:', error)

// Don't throw here - state saving failure shouldn't break the conversation

}

}

Deno.serve(async (req: Request) => {

// Handle CORS preflight requests

const corsResponse = handleCors(req)

if (corsResponse) {

return corsResponse

}

try {

// Only allow POST method

if (req.method !== 'POST') {

return createErrorResponse('Method not allowed', 405)

}

// Get authenticated user and token

const authToken = req.headers.get('Authorization')?.replace('Bearer ', '')

if (!authToken) {

return createErrorResponse('No authorization token provided', 401)

}

const user = await requireAuth(req)

// Parse request body

const body = await req.json()

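const { session_id, pdf_file } = body

// The message is optional when a PDF is attached (the chat function allows file-only uploads)

const message: string = typeof body.message === 'string' ? body.message : ''

if (!session_id || (!message && !pdf_file)) {

return createErrorResponse('Missing session_id, or neither message nor pdf_file provided', 400)

}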

// Parse PDF input if provided

let pdfInput: PDFInput | undefined;

if (pdf_file) {

pdfInput = {

filename: pdf_file.filename || 'document.pdf',

base64Data: pdf_file.base64Data

};

}

// Process the agent request

const response = await processAgentRequest(

session_id,

message,

user.id,

authToken,

pdfInput

)

return createResponse(response)

} catch (error) {

console.error('Error in ai-agents function:', error)

// Handle auth errors (thrown as Response objects)

if (error instanceof Response) {

return error

}

// Determine appropriate error response

const errorMessage = error instanceof Error ? error.message : 'Unexpected error occurred'

return createErrorResponse(errorMessage, 500)

}

})Key agent system features:

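Stateless functions: All state lives in PostgreSQL.

File processing: PDF support via OpenRouter's file-parser plugin.

Conversation persistence: Messages are stored in the conversation_messages table.

JSON structured responses: Agents reply in JSON so callers can reliably read fields such as is_prd_complete and sections.

Fallback handling: API or parsing errors return a fallback response instead of crashing the function.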
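Both agents parse the model's reply with a plain `JSON.parse` and fall back to treating the reply as text. Models sometimes wrap their JSON in a Markdown code fence. When that happens, the fallback shows the user the raw fenced JSON. The sketch below shows one way to handle this. It assumes replies follow the `PRDAgentResponse` shape. `stripCodeFence` and `parseAgentJson` are hypothetical names and are not part of the project code.

```typescript
// Hypothetical helpers (not in the original code): parse an agent reply that may be fenced.
interface PRDAgentResponse {
  output: string;
  is_prd_complete: boolean;
}

// Three backticks, built at runtime so the source never contains a literal fence.
const FENCE = "`".repeat(3);

/** Remove the opening fence line (which may carry a language tag) and the closing fence. */
function stripCodeFence(raw: string): string {
  const text = raw.trim();
  if (!text.startsWith(FENCE) || !text.endsWith(FENCE)) {
    return text;
  }
  const firstNewline = text.indexOf("\n");
  if (firstNewline === -1) {
    return text; // single-line input: leave it for JSON.parse to reject
  }
  return text.slice(firstNewline + 1, text.length - FENCE.length).trim();
}

/** Parse and validate a PRD agent reply, falling back to plain text like the original parser. */
function parseAgentJson(raw: string): PRDAgentResponse {
  try {
    const parsed: unknown = JSON.parse(stripCodeFence(raw));
    if (typeof parsed === "object" && parsed !== null) {
      const obj = parsed as Record<string, unknown>;
      if (typeof obj.output === "string" && typeof obj.is_prd_complete === "boolean") {
        return { output: obj.output, is_prd_complete: obj.is_prd_complete };
      }
    }
  } catch {
    // Not valid JSON: use the plain-text fallback below.
  }
  return { output: raw, is_prd_complete: false };
}
```

Inside `PRDAgent.parseResponse`, the call `JSON.parse(response)` could become `JSON.parse(stripCodeFence(response))`. The Markdown agent's parser could use the same helper with its own field checks.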

Step 9: Implement Session Management Function

Every conversation between a user and the AI agents needs to be tied to a session. The session acts as a container for:

User identity (via Supabase Auth)

Conversation history (linked through conversation_messages)

Generated PRD metadata (linked through prd)

By centralizing this state in a session record, the system ensures reliable context management and security isolation. Without session management, conversations would be stateless and disconnected, making features like multi-agent orchestration, auditing, or document association impossible.

This function (get-create-session) is the first entry point for authenticated users. It validates their identity, creates a new session in the database, and returns the session_id.

9.1 Explanation of get-create-session

Validates the user is authenticated (via Supabase Auth).

Creates a new session row in the session table.

Returns the session_id to the frontend, which will be required for all subsequent requests.

Uses Row-Level Security (RLS) to automatically restrict data access to the authenticated user.
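Each function extracts the token with `req.headers.get('Authorization')?.replace('Bearer ', '')`. If the header uses a different casing (`bearer ...`) or has no scheme at all, that expression returns the header mostly unchanged, and the result is still passed to `createUserClient`. HTTP treats authentication scheme names as case-insensitive. A stricter parser is a small change. The sketch below returns `null` unless the header matches `Bearer <token>`. `getBearerToken` is a hypothetical name, not an existing Supabase helper.

```typescript
// Hypothetical helper (not in the original code): read a bearer token from request headers.
function getBearerToken(headers: Headers): string | null {
  const header = headers.get("Authorization");
  if (!header) {
    return null;
  }
  // Case-insensitive scheme; require "Bearer", whitespace, then exactly one token.
  const match = /^Bearer\s+(\S+)$/i.exec(header.trim());
  return match ? match[1] : null;
}
```

In a handler, `const authToken = getBearerToken(req.headers)` followed by the existing `if (!authToken)` check would then return 401 for malformed headers as well as missing ones.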

9.2 Implement the function

supabase/functions/get-create-session/index.ts

// @ts-ignore: Deno global

declare const Deno: any

import { handleCors } from '../_shared/cors.ts'

import { requireAuth } from '../_shared/auth.ts'

import { createUserClient } from '../_shared/database.ts'

import { createResponse, createErrorResponse } from '../_shared/response.ts'

import { SessionData } from '../_shared/types.ts'

/**

* Create session in Supabase database

*/

async function createSession(userId: string, userEmail: string, authToken: string): Promise<SessionData> {

// Create client with user context already set

const supabase = createUserClient(authToken)

// Insert new session into database

const { data: session, error } = await supabase

.from('session')

.insert({

user_id: userId,

})

.select()

.single()

if (error) {

console.error('Database error:', error)

throw new Error('Failed to create session in database')

}

return session

}

Deno.serve(async (req) => {

// Handle CORS preflight requests

const corsResponse = handleCors(req)

if (corsResponse) {

return corsResponse

}

try {

// Only allow POST method

if (req.method !== 'POST') {

return createErrorResponse('Method not allowed', 405)

}

// Get authenticated user and token

const authToken = req.headers.get('Authorization')?.replace('Bearer ', '')

if (!authToken) {

return createErrorResponse('No authorization token provided', 401)

}

const user = await requireAuth(req)

// Create session

const session = await createSession(user.id, user.email!, authToken)

return createResponse({ session_id: session.id })

} catch (error) {

console.error('Error in create-session function:', error)

// Handle auth errors (thrown as Response objects)

if (error instanceof Response) {

return error

}

// Determine appropriate error response

const errorMessage = error instanceof Error ? error.message : 'Unexpected error occurred'

// Remaining errors here come from the database or server, not from client input

const statusCode = 500

return createErrorResponse(errorMessage, statusCode)

}

})

Configuration files

supabase/functions/get-create-session/deno.json: {"importMap": "../import_map.json"}

supabase/functions/get-create-session/.npmrc: npm configuration

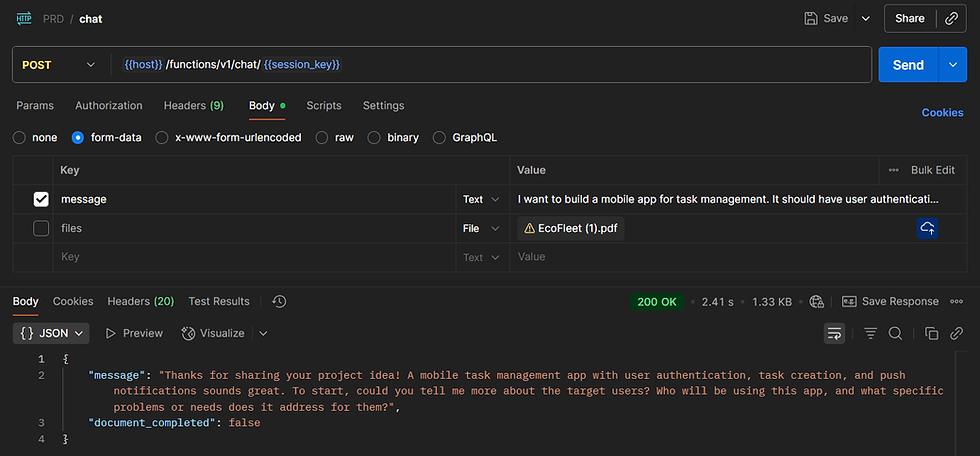

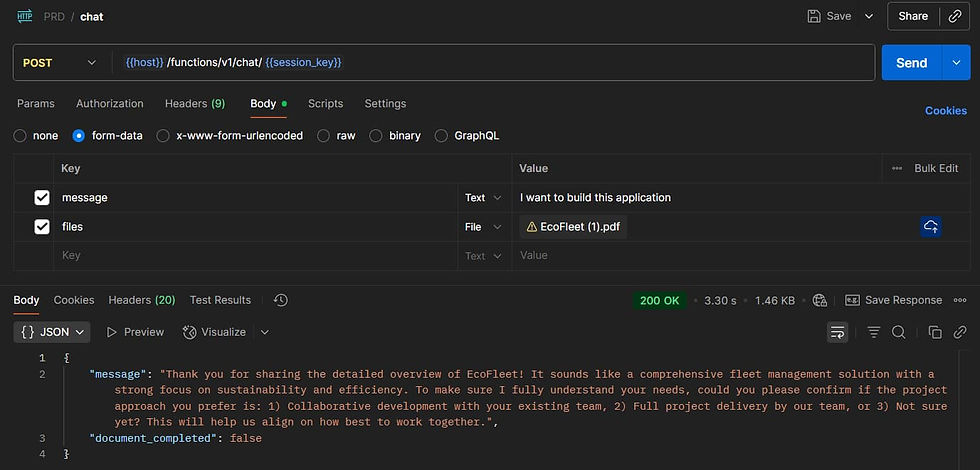

Step 10: Implement Chat Function (FormData + File Upload)

The chat function is the main user interaction point. It connects the frontend to the AI agents, allowing users to:

Ask clarifying questions about their PRD.

Upload supporting documents (PDFs) like product specs or existing drafts.

Get real-time AI responses while keeping everything tied to a secure session.

Without this step, users would have no way to provide context-rich input (text + files), which is essential for generating accurate PRDs. This function also enforces constraints (20MB file size, PDF-only) and validates that each request belongs to the correct session, preventing unauthorized access.

10.1 Explanation of chat function

The chat function is a frontend-optimized interface that:

Accepts FormData with message + PDF files.

Processes PDF files to base64 automatically.

Forwards the request to the ai-agents function through an internal HTTP call.

Validates session permissions via RLS.

Validates files (20MB maximum; only application/pdf files are processed).
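The MIME type in `file.type` is supplied by the client, so it can be wrong or spoofed. A header check inspects the file's first bytes instead, because PDF files begin with the ASCII signature `%PDF-`. The sketch below shows such a check. `hasPdfSignature` and `isLikelyPdf` are hypothetical helpers. The async wrapper assumes a standard web `File`, which Deno provides for `FormData` entries.

```typescript
// Hypothetical helpers (not in the original code): verify the "%PDF-" magic bytes.
const PDF_SIGNATURE = [0x25, 0x50, 0x44, 0x46, 0x2d]; // "%", "P", "D", "F", "-"

function hasPdfSignature(bytes: Uint8Array): boolean {
  if (bytes.length < PDF_SIGNATURE.length) {
    return false;
  }
  return PDF_SIGNATURE.every((byte, i) => bytes[i] === byte);
}

// Reads only the first few bytes of the upload rather than the whole file.
async function isLikelyPdf(file: File): Promise<boolean> {
  const head = new Uint8Array(await file.slice(0, PDF_SIGNATURE.length).arrayBuffer());
  return hasPdfSignature(head);
}
```

Because `isLikelyPdf` is async, it would need to run in the request handler after `validateFiles`. Alternatively, `validateFiles` could itself become async.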

10.2 Key technical characteristics

File Processing Pipeline:

FormData parsing: Extracts message + files array.

PDF conversion: ArrayBuffer → base64 (no external libs).

Size validation: 20MB configured limit.

Type validation: Only files with the application/pdf MIME type are processed.

Internal API Call:

Self-invocation: Calls ai-agents via fetch().

Token forwarding: Passes Authorization header.

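Error propagation: Non-2xx responses from ai-agents are rethrown with their status and body, and the handler converts them into an HTTP error response.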
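The chat handler chooses a status code by searching the error message for strings such as 'AI service' or 'Invalid'. However, `sendRequestToOpenRouter` throws messages that begin with 'AI agents error'. As a result, a downstream failure usually falls through to the default 500 unless its body happens to contain one of those keywords. One alternative is to carry the status on the error itself. The sketch below is hypothetical (`HttpError`, `toUpstreamError` and `statusFor` are not in the project). Passing 4xx codes through and reporting other failures as 502 is a design assumption.

```typescript
// Hypothetical error type that carries an HTTP status alongside the message.
class HttpError extends Error {
  readonly status: number;
  constructor(status: number, message: string) {
    super(message);
    this.name = "HttpError";
    this.status = status;
    Object.setPrototypeOf(this, new.target.prototype); // keep instanceof working on older targets
  }
}

// Pass client errors from ai-agents through; report anything else as 502 Bad Gateway.
function toUpstreamError(status: number, body: string): HttpError {
  const mapped = status >= 400 && status < 500 ? status : 502;
  return new HttpError(mapped, `AI agents error: ${status} - ${body}`);
}

// Status to return from the handler's catch block.
function statusFor(error: unknown): number {
  return error instanceof HttpError ? error.status : 500;
}
```

In `sendRequestToOpenRouter`, `throw toUpstreamError(response.status, errorText)` would replace the plain `Error`. The existing `error instanceof Error` rethrow preserves it, and the catch block in `Deno.serve` could call `createErrorResponse(errorMessage, statusFor(error))`.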

10.3 Implement the function

supabase/functions/chat/index.ts

// @ts-ignore: Deno global

declare const Deno: any

import { handleCors } from '../_shared/cors.ts'

import { requireAuth } from '../_shared/auth.ts'

import { createUserClient } from '../_shared/database.ts'

import { createResponse, createErrorResponse } from '../_shared/response.ts'

import { AIServiceResponse } from '../_shared/types.ts'

const MAX_FILE_SIZE = 20 * 1024 * 1024 // 20MB

/**

* Convert File objects to PDFInput format for OpenRouter

*/

async function convertFilesToPDFInput(files: File[]): Promise<{ filename: string; base64Data: string } | undefined> {

// Only process the first PDF file for now

const pdfFile = files.find(file => file.type === 'application/pdf');

if (!pdfFile) {

return undefined;

}

try {

// Convert first PDF file to base64

const arrayBuffer = await pdfFile.arrayBuffer();

const uint8Array = new Uint8Array(arrayBuffer);

// Convert to base64

let binary = '';

for (let i = 0; i < uint8Array.length; i++) {

binary += String.fromCharCode(uint8Array[i]);

}

const base64Data = btoa(binary);

return {

filename: pdfFile.name,

base64Data

};

} catch (error) {

console.error('Error converting PDF to base64:', error);

return undefined;

}

}

/**

* Send request to OpenRouter AI agents

*/

async function sendRequestToOpenRouter(

sessionId: string,

userInput: string,

authToken: string,

files?: File[]

): Promise<AIServiceResponse> {

try {

// Convert files to PDF input if needed

const pdfInput = files && files.length > 0 ? await convertFilesToPDFInput(files) : undefined;

// Prepare request body

const requestBody: any = {

session_id: sessionId,

message: userInput

};

// Add PDF file if present

if (pdfInput) {

requestBody.pdf_file = {

filename: pdfInput.filename,

base64Data: pdfInput.base64Data

};

}

// Call our new ai-agents function

const baseUrl = Deno.env.get('SUPABASE_URL') || 'http://localhost:54321';

const response = await fetch(`${baseUrl}/functions/v1/ai-agents`, {

method: 'POST',

headers: {

'Authorization': `Bearer ${authToken}`,

'Content-Type': 'application/json'

},

body: JSON.stringify(requestBody)

});

if (!response.ok) {

const errorText = await response.text();

throw new Error(`AI agents error: ${response.status} - ${errorText}`);

}

const data = await response.json();

// Return in expected format

return {

output: data.output,

is_prd_complete: data.is_prd_complete || false,

sections: data.sections

};

} catch (error) {

console.error('Error sending request to OpenRouter agents:', error);

if (error instanceof Error) {

throw error;

}

throw new Error('An error has occurred in the AI service.');

}

}

/**

* Process user message and files through OpenRouter AI agents

*/

async function sendMessage(

sessionId: string,

userInput: string,

authToken: string,

files?: File[]

): Promise<AIServiceResponse> {

return await sendRequestToOpenRouter(sessionId, userInput, authToken, files);

}

/**

* Validate message input

*/

function validateMessage(message: string): string {

if (!message || !message.trim()) {

throw new Error('Message cannot be empty')

}

if (message.length > 10000) {

throw new Error('Message exceeds the maximum length of 10000 characters')

}

return message.trim()

}

/**

* Validate uploaded files

*/

function validateFiles(files: File[]): void {

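for (const file of files) {

// Only PDFs are processed downstream, so reject other types instead of silently ignoring them

if (file.type !== 'application/pdf') {

throw new Error(`Invalid file type for '${file.name}': only PDF files are accepted`)

}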

if (file.size > MAX_FILE_SIZE) {

throw new Error(`File '${file.name}' exceeds the maximum size limit of 20MB`)

}

}

}

Deno.serve(async (req) => {

// Handle CORS preflight requests

const corsResponse = handleCors(req)

if (corsResponse) {

return corsResponse

}

try {

// Only allow POST method

if (req.method !== 'POST') {

return createErrorResponse('Method not allowed', 405)

}

// Extract session_id from URL path

const url = new URL(req.url)

const pathParts = url.pathname.split('/')

const sessionId = pathParts[pathParts.length - 1]

if (!sessionId) {

return createErrorResponse('Session ID is required', 400)

}

// Get authenticated user and token

const authToken = req.headers.get('Authorization')?.replace('Bearer ', '')

if (!authToken) {

return createErrorResponse('No authorization token provided', 401)

}

const user = await requireAuth(req)

// Create Supabase client with user context

const supabaseClient = createUserClient(authToken)

// Parse form data for message and files

const formData = await req.formData()

const message = formData.get('message') as string | null

const files: File[] = []

// Extract all files from form data

const fileEntries = formData.getAll('files')

for (const entry of fileEntries) {

if (entry instanceof File) {

files.push(entry)

}

}

// Validate input data - message is optional but if provided must be valid

let validatedMessage = ''

if (message !== null && message !== undefined) {

try {

validatedMessage = validateMessage(message)

} catch (error) {

return createErrorResponse(

error instanceof Error ? error.message : 'Invalid message',

400

)

}

}

if (files.length > 0) {

try {

validateFiles(files)

} catch (error) {

return createErrorResponse(

error instanceof Error ? error.message : 'Invalid files',

400

)

}

}

// Check that at least message or files are provided

if (!validatedMessage && files.length === 0) {

return createErrorResponse('At least one of message or files must be provided', 400)

}

// Verify session exists and belongs to user

const { data: session, error: sessionError } = await supabaseClient

.from('session')

.select('*')

.eq('user_id', user.id)

.eq('id', sessionId)

.single()

if (sessionError || !session) {

return createErrorResponse('Session not found', 404)

}

// Send message to OpenRouter AI agents

const llmResponse = await sendMessage(

sessionId,

validatedMessage,

authToken,

files.length > 0 ? files : undefined

)

const responseData = {

message: llmResponse.output,

document_completed: llmResponse.is_prd_complete || false,

}

return createResponse(responseData)

} catch (error) {

console.error('Error in chat function:', error)

// Handle auth errors (thrown as Response objects)

if (error instanceof Response) {

return error

}

// Determine appropriate error response based on error type

const errorMessage = error instanceof Error ? error.message : 'Unexpected error occurred'

let statusCode = 500

if (errorMessage.includes('LLM service') || errorMessage.includes('AI service')) {

statusCode = 503

} else if (errorMessage.includes('Bad parameters') || errorMessage.includes('Invalid')) {

statusCode = 400

}

return createErrorResponse(errorMessage, statusCode)

}

})

Key Implementation Details:

MAX_FILE_SIZE: 20MB constant (20 * 1024 * 1024 bytes)

PDF Detection: Looks for file.type === 'application/pdf'

Base64 Encoding: Manual loop without external libs

Session Security: The session lookup filters on both id and user_id, using a client created with the user's token so RLS also applies.

Real-time Integration: Optimized for live preview workflow
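The manual loop in `convertFilesToPDFInput` appends one character per byte. For a 20MB file, that means roughly 20 million string concatenations before `btoa` runs. A common alternative is to convert the bytes in chunks with `String.fromCharCode`. The sketch below produces the same base64 output. `bytesToBase64` is a hypothetical name, and the 32KB chunk size is an assumption chosen to stay well under engines' argument-count limits. Deno's standard library also offers `encodeBase64` in `@std/encoding` if an import is acceptable.

```typescript
// Hypothetical replacement for the byte-by-byte loop: base64-encode bytes in chunks.
const CHUNK_SIZE = 0x8000; // 32768 bytes per String.fromCharCode call (assumed safe size)

function bytesToBase64(bytes: Uint8Array): string {
  const parts: string[] = [];
  for (let i = 0; i < bytes.length; i += CHUNK_SIZE) {
    const chunk = bytes.subarray(i, i + CHUNK_SIZE);
    // Each byte maps to one Latin-1 character, which is the input btoa expects.
    parts.push(String.fromCharCode(...Array.from(chunk)));
  }
  return btoa(parts.join(""));
}
```

In the chat function, `const base64Data = bytesToBase64(new Uint8Array(await pdfFile.arrayBuffer()))` would replace the loop and the `btoa` call.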

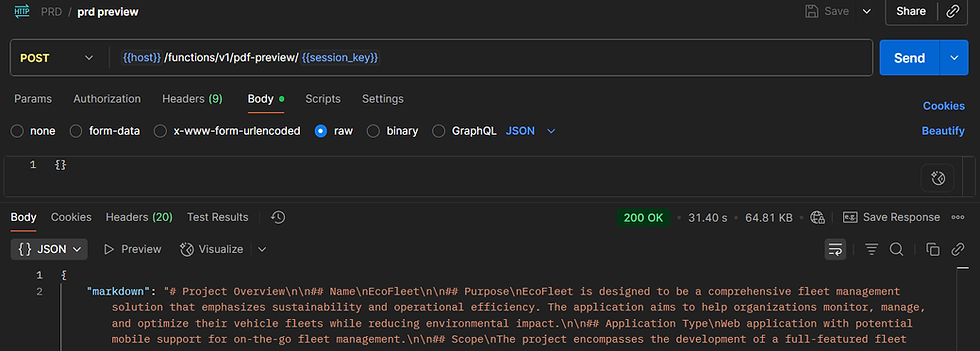

Step 11: Implement PDF Preview Function (jsPDF Generation)

11.1 Explanation of pdf-preview

This function is the document generation engine:

Sends .generate_markdown command to agents.

Converts markdown to HTML via marked library.

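Wraps the HTML in a template with embedded CSS (the styles apply to the HTML fallback; the jsPDF path extracts the text and sets its own font sizes).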

Generates the PDF with jsPDF, a pure JavaScript library that runs inside the edge function without a headless browser.

Includes logo from Supabase Storage.
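On the frontend, the base64 string returned by pdf-preview must be turned back into bytes before the browser can display it. The sketch below assumes the PDF arrives as a plain base64 string; the exact field in `PdfPreviewResponse` is not shown here. It builds a `Blob` that can be passed to `URL.createObjectURL`. Because the HTML fallback in `markdownToPdfBase64` returns base64-encoded HTML rather than a PDF, the sketch checks for the `%PDF-` prefix before labeling the blob. `base64ToBytes` and `previewBlob` are hypothetical names.

```typescript
// Hypothetical client-side helpers: decode the preview payload into a displayable Blob.
function base64ToBytes(base64: string) {
  const binary = atob(base64);
  const bytes = new Uint8Array(binary.length);
  for (let i = 0; i < binary.length; i++) {
    bytes[i] = binary.charCodeAt(i);
  }
  return bytes;
}

function previewBlob(base64: string): Blob {
  const bytes = base64ToBytes(base64);
  // A real PDF starts with "%PDF-"; anything else is treated as the HTML fallback.
  const isPdf = bytes.length >= 5 &&
    String.fromCharCode(...Array.from(bytes.subarray(0, 5))) === "%PDF-";
  return new Blob([bytes], { type: isPdf ? "application/pdf" : "text/html" });
}
```

`URL.createObjectURL(previewBlob(base64))` gives a URL that an `<iframe>` or download link can use. Call `URL.revokeObjectURL` when the preview is replaced.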

11.2 Why jsPDF vs Puppeteer?

Performance Benefits:

Speed: 1-2 seconds vs 8-15 seconds.

Memory: Minimal footprint vs browser overhead.

Cold starts: No browser launching needed.

Serverless friendly: Compatible with edge functions.

Technical Approach:

HTML processing: marked.js markdown → HTML.

CSS styling: Embedded styles for professional look.

PDF generation: jsPDF draws the text inside the edge function; no browser is involved.

Logo integration: Fetch + base64 embedding.
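The jsPDF path strips tags with regular expressions and then has to decode the entities that marked emits when it escapes text (`&amp;`, `&lt;`, `&gt;`, `&quot;`, `&#39;`). When the replacements are chained, as in `generateSimplePdfWithJsPDF`, order matters. `&amp;` must be decoded last; otherwise text that literally contained `&lt;`, which marked escapes as `&amp;lt;`, would be decoded twice into `<`. A single-pass lookup avoids the ordering problem entirely. The sketch below is hypothetical (`decodeBasicEntities` is not in the project). It covers only the listed entities, not the full HTML set.

```typescript
// Hypothetical helper: decode a small set of HTML entities in one pass.
const ENTITY_MAP: Record<string, string> = {
  "&nbsp;": " ",
  "&amp;": "&",
  "&lt;": "<",
  "&gt;": ">",
  "&quot;": '"',
  "&#39;": "'",
};

function decodeBasicEntities(text: string): string {
  // Replaced text is never re-scanned, so "&amp;lt;" becomes "&lt;" rather than "<".
  return text.replace(/&(?:nbsp|amp|lt|gt|quot|#39);/g, (entity) => ENTITY_MAP[entity]);
}
```

Entities outside the map, such as `&copy;`, are left untouched.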

11.3 Implement the function

supabase/functions/pdf-preview/index.ts

// @ts-ignore: Deno global

declare const Deno: any

import { handleCors } from '../_shared/cors.ts'

import { requireAuth } from '../_shared/auth.ts'

import { createUserClient } from '../_shared/database.ts'

import { createResponse, createErrorResponse } from '../_shared/response.ts'

import { PdfPreviewResponse, AIServiceResponse } from '../_shared/types.ts'

/**

* Send .generate_markdown command to OpenRouter AI agents

*/

async function sendMarkdownGenerationRequest(

sessionId: string,

authToken: string

): Promise<AIServiceResponse> {

try {

// Call our new ai-agents function with .generate_markdown command

const baseUrl = Deno.env.get('SUPABASE_URL') || 'http://localhost:54321';

const response = await fetch(`${baseUrl}/functions/v1/ai-agents`, {

method: 'POST',

headers: {

'Authorization': `Bearer ${authToken}`,

'Content-Type': 'application/json'

},

body: JSON.stringify({

session_id: sessionId,

message: '.generate_markdown'

})

});

if (!response.ok) {

const errorText = await response.text();

throw new Error(`AI agents error: ${response.status} - ${errorText}`);

}

const data = await response.json();

// Return in expected format

return {

output: data.output,

sections: data.sections || []

};

} catch (error) {

console.error('Error sending markdown generation request:', error);

if (error instanceof Error) {

throw error;

}

throw new Error('An error has occurred in the AI service.');

}

}

/**

* Convert markdown to PDF with styling and return as base64

*

* Uses jsPDF to generate real PDF documents from markdown content.

* Includes proper formatting, headers, and styling.

*/

async function markdownToPdfBase64(markdownText: string): Promise<string> {

try {

// Import required libraries for markdown conversion

// @ts-ignore: Dynamic import

const { marked } = await import('https://esm.sh/marked@9.1.6')

// Convert markdown to HTML

const htmlContent = await marked(markdownText)

// Get logo as base64 data URI

const logoDataUri = await getLogoDataUri()

// Create styled HTML document (same as Django implementation)

const styledHtml = `

<!DOCTYPE html>

<html>

<head>

<meta charset="UTF-8">

<style>

@page {

margin: 2.5cm 1cm 2cm 1cm;

@bottom-left {

content: element(footer);

}

}

#footer {

position: running(footer);

font-style: italic;

font-size: 10px;

color: #808080;

}

#footer a {

color: #808080;

text-decoration: none;

}

body {

font-family: 'Arial', sans-serif;

}

h1, h2, h3, h4, h5, h6 {

font-weight: bold;

margin: 0;

padding: 0.5em 0;

}

h1 { font-size: 2em; }

h2 { font-size: 1.75em; }

h3 { font-size: 1.5em; }

h4 { font-size: 1.25em; }

h5 { font-size: 1.1em; }

h6 { font-size: 1em; }

p {

white-space: break-spaces;

}

p:not(:first-child, :last-child) {

margin: 0.5em 0;

}

p:first-child {

margin-bottom: 0.5em;

}

p:last-child {

margin-top: 0.5em;

}

ol, ul {

margin: 0.5em 0;

padding-left: 20px;

}

ul { list-style-type: disc; }

ol { list-style-type: decimal; }

strong, b { font-weight: bold; }

em, i { font-style: italic; }

table {

box-sizing: border-box;

background-color: transparent;

border-collapse: collapse;

border-spacing: 0;

max-width: 100%;

margin-bottom: 20px;

border: 1px solid #ddd;

width: auto;

}

thead, tbody {

border-collapse: collapse;

border-spacing: 0;

box-sizing: border-box;

}

table thead th {

text-align: left;

padding: 8px;

line-height: 1.5;

vertical-align: bottom;

border: 1px solid #ddd;

border-bottom-width: 2px;

border-top: 0;

}

table tbody td {

padding: 8px;

line-height: 1.5;

vertical-align: top;

border: 1px solid #ddd;

}

table tbody tr:nth-child(odd) td {

background-color: #f9f9f9;

}

</style>

</head>

<body>

<div id="footer">

<a href="https://prdagent.leanware.co/">This PRD was generated using the free Leanware PRD Agent - prdagent.leanware.co</a>

</div>

<div id="content">

${htmlContent}

</div>

</body>

</html>

`

// Generate PDF using jsPDF

try {

return await generateSimplePdfWithJsPDF(styledHtml, logoDataUri)

} catch (error) {

console.warn('PDF generation failed, falling back to HTML:', error)

const htmlBase64 = btoa(unescape(encodeURIComponent(styledHtml)))

return htmlBase64

}

} catch (error) {

console.error('Error generating PDF:', error)

throw new Error('Failed to generate PDF from markdown')

}

}

/**
 * Simple PDF generation with jsPDF (improved HTML parsing)
 */
async function generateSimplePdfWithJsPDF(htmlContent: string, logoDataUri?: string): Promise<string> {
  try {
    // @ts-ignore: Dynamic import
    const { jsPDF } = await import('https://esm.sh/jspdf@2.5.1')

    const doc = new jsPDF({
      orientation: 'portrait',
      unit: 'mm',
      format: 'a4'
    })

    const pageWidth = doc.internal.pageSize.getWidth()
    const pageHeight = doc.internal.pageSize.getHeight()
    const margin = 20
    const maxWidth = pageWidth - (margin * 2)
    let yPosition = margin

    // Add logo if provided (top-right corner)
    if (logoDataUri) {
      try {
        // jsPDF's addImage() handles raster formats such as PNG and JPEG but not SVG,
        // so the SVG placeholder returned by getLogoDataUri() is skipped here
        const formatMatch = logoDataUri.match(/^data:image\/(png|jpe?g);base64,/i)
        if (formatMatch) {
          const format = formatMatch[1].toLowerCase() === 'png' ? 'PNG' : 'JPEG'
          const logoBase64 = logoDataUri.slice(formatMatch[0].length) // Strip the data URI prefix
          doc.addImage(logoBase64, format, pageWidth - 60, 10, 50, 13.75)
        } else {
          console.warn('[WARN] Unsupported logo format, skipping logo')
        }
      } catch (logoError) {
        console.warn('[WARN] Failed to add logo to PDF:', logoError)
      }
    }

    // Extract the inner HTML of <div id="content"> (falls back to the whole document)
    const contentDiv = htmlContent.match(/<div id="content">(.*?)<\/div>/s)?.[1] || htmlContent

    // Turn headings into marker lines and paragraphs/list items into plain lines,
    // then strip the remaining tags and decode common HTML entities
    const elements = contentDiv
      .replace(/<h1[^>]*>(.*?)<\/h1>/gi, '\n\n### H1: $1 ###\n')
      .replace(/<h2[^>]*>(.*?)<\/h2>/gi, '\n\n## H2: $1 ##\n')
      .replace(/<h3[^>]*>(.*?)<\/h3>/gi, '\n\n# H3: $1 #\n')
      .replace(/<p[^>]*>(.*?)<\/p>/gi, '\n$1\n')
      .replace(/<br\s*\/?>/gi, '\n')
      .replace(/<li[^>]*>(.*?)<\/li>/gi, '• $1\n')
      .replace(/<[^>]*>/g, '') // Remove remaining HTML tags
      .replace(/&nbsp;/g, ' ')
      .replace(/&lt;/g, '<')
      .replace(/&gt;/g, '>')
      .replace(/&quot;/g, '"')
      .replace(/&#39;/g, "'")
      .replace(/&amp;/g, '&') // Decode &amp; last so an escaped "&amp;lt;" stays "&lt;"
      .split('\n')
      .filter(line => line.trim())

    // Add title
    doc.setFontSize(16)
    doc.setFont(undefined, 'bold')
    doc.text('Project Requirements Document', margin, yPosition)
    yPosition += 15

    // Render each element: headings in larger bold text, everything else as body text
    for (const element of elements) {
      const trimmed = element.trim()
      if (!trimmed) continue

      // Start a new page near the bottom margin. This is checked once per element,
      // so a paragraph that wraps to many lines can still run past the page end.
      if (yPosition > pageHeight - 30) {
        doc.addPage()
        yPosition = margin
      }

      if (trimmed.startsWith('### H1:') && trimmed.endsWith(' ###')) {
        // H1 heading
        doc.setFontSize(14)
        doc.setFont(undefined, 'bold')
        const headerText = trimmed.replace(/^### H1: /, '').replace(/ ###$/, '')
        const lines = doc.splitTextToSize(headerText, maxWidth)
        doc.text(lines, margin, yPosition)
        yPosition += lines.length * 8 + 5
      } else if (trimmed.startsWith('## H2:') && trimmed.endsWith(' ##')) {
        // H2 heading
        doc.setFontSize(12)
        doc.setFont(undefined, 'bold')
        const headerText = trimmed.replace(/^## H2: /, '').replace(/ ##$/, '')
        const lines = doc.splitTextToSize(headerText, maxWidth)
        doc.text(lines, margin, yPosition)
        yPosition += lines.length * 7 + 4
      } else if (trimmed.startsWith('# H3:') && trimmed.endsWith(' #')) {
        // H3 heading
        doc.setFontSize(11)
        doc.setFont(undefined, 'bold')
        const headerText = trimmed.replace(/^# H3: /, '').replace(/ #$/, '')
        const lines = doc.splitTextToSize(headerText, maxWidth)
        doc.text(lines, margin, yPosition)
        yPosition += lines.length * 6 + 3
      } else {
        // Regular text
        doc.setFontSize(10)
        doc.setFont(undefined, 'normal')
        const lines = doc.splitTextToSize(trimmed, maxWidth)
        doc.text(lines, margin, yPosition)
        yPosition += lines.length * 5 + 3
      }
    }

    // output('datauristring') returns a data URI; keep only the base64 payload after the comma
    const pdfBase64 = doc.output('datauristring').split(',')[1]
    return pdfBase64
  } catch (error) {
    console.error('jsPDF error:', error)
    throw error
  }
}
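
// Sketch (hypothetical helper, not used by the original code): generateSimplePdfWithJsPDF
// checks for a page break only once per element, so a paragraph that wraps to many lines
// can run past the bottom of the page. One way to avoid this is to write the wrapped lines
// one at a time and break the page whenever the next line would start below the limit.
// PdfWriter is an assumed minimal interface covering only the two jsPDF methods used here.
interface PdfWriter {
  text(text: string, x: number, y: number): unknown
  addPage(): unknown
}

function writeWrappedLines(
  doc: PdfWriter,
  lines: string[],
  x: number,
  startY: number,
  lineHeight: number,
  topMargin: number,
  bottomLimit: number
): number {
  let y = startY
  for (const line of lines) {
    // Break the page before a line that would start below the usable area
    if (y > bottomLimit) {
      doc.addPage()
      y = topMargin
    }
    doc.text(line, x, y)
    y += lineHeight
  }
  return y // Next free y position, in the document's units
}
// Usage (hypothetical), replacing the regular-text branch:
// yPosition = writeWrappedLines(doc, doc.splitTextToSize(trimmed, maxWidth), margin, yPosition, 5, margin, pageHeight - 30) + 3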

/**
 * Get logo as base64 data URI from storage URL
 */
async function getLogoDataUri(): Promise<string> {
  try {
    const logoUrl = Deno.env.get('LOGO_URL')
    if (!logoUrl) {
      console.warn('LOGO_URL not configured, using placeholder')
      // Fallback to placeholder SVG
      const placeholderSvg = `<svg width="200" height="55" xmlns="http://www.w3.org/2000/svg">
  <rect width="200" height="55" fill="#4F46E5"/>
  <text x="100" y="30" text-anchor="middle" fill="white" font-family="Arial" font-size="14">Leanware</text>
</svg>`
      const base64Svg = btoa(placeholderSvg)
      return `data:image/svg+xml;base64,${base64Svg}`
    }

    // Download logo from bucket
    const response = await fetch(logoUrl)
    if (!response.ok) {
      throw new Error(`Failed to fetch logo: ${response.status}`)
    }
    const logoBuffer = await response.arrayBuffer()
    const uint8Array = new Uint8Array(logoBuffer)

    // Convert to base64
    let binary = ''
    for (let i = 0; i < uint8Array.length; i++) {
      binary += String.fromCharCode(uint8Array[i])
    }
    const base64Logo = btoa(binary)

    // Detect content type from response headers
    const contentType = response.headers.get('content-type') || 'image/png'
    return `data:${contentType};base64,${base64Logo}`
  } catch (error) {
    console.error('Error loading logo from storage:', error)
    // Fallback to placeholder
    const placeholderSvg = `<svg width="200" height="55" xmlns="http://www.w3.org/2000/svg">
  <rect width="200" height="55" fill="#4F46E5"/>
  <text x="100" y="30" text-anchor="middle" fill="white" font-family="Arial" font-size="14">Leanware</text>
</svg>`
    const base64Svg = btoa(placeholderSvg)
    return `data:image/svg+xml;base64,${base64Svg}`
  }
}
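
// Sketch (hypothetical helpers, not part of the original handler): the handler below uses
// the last path segment as the session ID and strips "Bearer " with a plain string replace.
// A request without an ID would then treat the function name itself as the session ID, and
// a header without the "Bearer " prefix would be passed through unchanged. These stricter
// variants return null in both cases. They assume the route is ".../pdf-preview/<sessionId>"
// (the function name is inferred from the handler's log message), and they match the
// authentication scheme case-insensitively, as HTTP scheme names are case-insensitive.
function extractSessionId(pathname: string, functionName: string): string | null {
  const segments = pathname.split('/').filter(Boolean)
  const index = segments.lastIndexOf(functionName)
  // Exactly one segment must follow the function name
  if (index === -1 || index !== segments.length - 2) return null
  return segments[index + 1] ?? null
}

function extractBearerToken(header: string | null): string | null {
  // Expect "Bearer <token>" with a non-empty token and nothing after it
  const match = header?.match(/^Bearer\s+(\S+)\s*$/i)
  return match?.[1] ?? null
}
// Usage (hypothetical): const sessionId = extractSessionId(new URL(req.url).pathname, 'pdf-preview')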

Deno.serve(async (req) => {
  // Handle CORS preflight requests
  const corsResponse = handleCors(req)
  if (corsResponse) {
    return corsResponse
  }

  try {
    // Only allow POST method
    if (req.method !== 'POST') {
      return createErrorResponse('Method not allowed', 405)
    }

    // Extract session_id from the last segment of the URL path
    const url = new URL(req.url)
    const pathParts = url.pathname.split('/')
    const sessionId = pathParts[pathParts.length - 1]
    if (!sessionId) {
      return createErrorResponse('Session ID is required', 400)
    }

    // Get authenticated user and token
    const authToken = req.headers.get('Authorization')?.replace('Bearer ', '')
    if (!authToken) {
      return createErrorResponse('No authorization token provided', 401)
    }
    const user = await requireAuth(req)

    // Create Supabase client with user context
    const supabaseClient = createUserClient(authToken)

    // Verify session exists and belongs to user
    const { data: session, error: sessionError } = await supabaseClient
      .from('session')
      .select('*')
      .eq('user_id', user.id)
      .eq('id', sessionId)
      .single()

    if (sessionError || !session) {
      return createErrorResponse('Session not found', 404)
    }

    // Send .generate_markdown command to OpenRouter AI agents
    const aiResponse = await sendMarkdownGenerationRequest(sessionId, authToken)

    // Generate PDF from markdown
    const pdfBase64 = await markdownToPdfBase64(aiResponse.output)

    const responseData: PdfPreviewResponse = {
      markdown: aiResponse.output,
      pdf_base64: pdfBase64,
      sections: aiResponse.sections || []
    }

    return createResponse(responseData)
  } catch (error) {
    console.error('Error in pdf-preview function:', error)
    // Handle auth errors (thrown as Response objects)