What Are Evals in AI? A Complete Guide to AI Evaluations

- Leanware Editorial Team

- Dec 18, 2025

- 10 min read

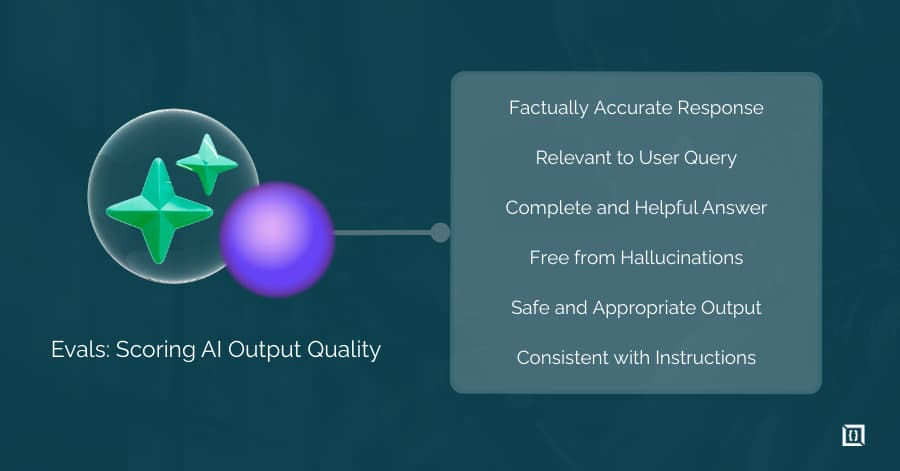

Traditional software has unit tests. You write assertions, run them, and know immediately if something broke. LLMs don't work that way. Outputs are non-deterministic, "correct" is often subjective, and the same prompt can produce different results each time. Evals solve this by giving you a systematic framework to measure model behavior across dimensions that matter: accuracy, relevance, safety, and task completion.

Building evals is now a core engineering skill for any team shipping LLM features. Without them, you're guessing whether prompt changes help or hurt, whether model updates introduce regressions, and whether your system handles edge cases.

This guide covers the evaluation methods that work, the pitfalls that waste time, and how to build an eval pipeline you can actually rely on.

What Does Eval Mean in AI?

Eval is short for evaluation. In AI and machine learning, evals measure how well a model performs on specific tasks or criteria. For LLMs, this typically means assessing output quality across dimensions like relevance, factual accuracy, coherence, helpfulness, and safety.

A simple example: you have a customer support chatbot. An eval might check whether the bot's responses actually answer the user's question, whether it hallucinates information not in the knowledge base, and whether it maintains appropriate tone. You run hundreds or thousands of test cases through the system and score the outputs against these criteria.

Evals apply to different stages. You might eval a base model to decide which one to use. You might eval prompt variations to optimize performance. You might eval production outputs to catch regressions. The core idea stays the same: systematic measurement of model behavior.
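To make the chatbot example concrete, here is a minimal sketch of an eval loop. Everything in it is an assumption made for illustration: `fake_support_bot` stands in for the real system, and `must_mention` is a deliberately crude criterion (does the answer contain the facts we expect?). A real eval would use richer scoring, but the shape is the same: cases in, scores out, one aggregate number to track.

```python
from dataclasses import dataclass


@dataclass
class EvalCase:
    question: str
    must_mention: list[str]  # facts a good answer should contain (illustrative criterion)


def fake_support_bot(question: str) -> str:
    """Hypothetical stand-in for the system under test."""
    canned = {
        "How do I reset my password?": "Open Settings, choose Security, then click Reset Password.",
        "What is your refund window?": "We are happy to help with refunds!",
    }
    return canned.get(question, "Sorry, I don't know.")


def score_case(case: EvalCase, answer: str) -> float:
    """Fraction of required facts that appear in the answer (case-insensitive)."""
    hits = sum(1 for fact in case.must_mention if fact.lower() in answer.lower())
    return hits / len(case.must_mention)


def run_eval(cases, bot) -> float:
    """Run every case through the bot and return the mean score."""
    scores = [score_case(case, bot(case.question)) for case in cases]
    return sum(scores) / len(scores)


cases = [
    EvalCase("How do I reset my password?", ["Settings", "Reset Password"]),
    EvalCase("What is your refund window?", ["30 days"]),
]
mean_score = run_eval(cases, fake_support_bot)
print(f"mean score: {mean_score:.2f}")
```

The second case fails because the bot sounds helpful but never states the refund window, which is exactly the kind of gap a tone-only check would miss.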

Difference Between Evals and Testing

Traditional software testing verifies that code behaves as expected. Unit tests check specific functions, while integration tests ensure components work together. Behavior is deterministic and well-defined.

Evals, by contrast, measure the quality of AI outputs, which are non-deterministic. The same prompt can produce different results, and “correct” often depends on context:

- A response may be factually accurate but not useful.

- A response may be helpful but slightly off-topic.

| Aspect | Testing | Evals |
| --- | --- | --- |
| Goal | Verify code correctness | Measure output quality and performance |
| Output | Pass/fail | Scores, rankings, distributions |
| Behavior | Deterministic | Probabilistic, context-dependent |
| Focus | Bugs or errors | Quality, edge cases, behavioral drift |
| Assessment | Well-defined | Task-specific, sometimes subjective |

Both are necessary: testing ensures the system works reliably, and evals ensure the AI performs appropriately.

Importance of Evals in AI Development

Evals play a key role in AI development for several reasons:

- Model selection: They provide objective data to compare models or fine-tuned variants, replacing guesswork.

- Regression detection: Updates to models, prompts, or infrastructure can degrade quality. Evals catch these issues early.

- Identifying failure modes: Evals highlight specific weaknesses, such as struggles with nuanced queries or certain input types.

- Supporting iteration: Performance measurement allows systematic improvement through model comparisons, prompt testing, and tracking changes over time.

Types of AI Evaluation Methods

Reference-Based vs Reference-Free Metrics

Reference-based metrics compare model output to a known correct answer. BLEU and ROUGE scores work this way, measuring overlap between generated text and reference text. These metrics suit tasks with clear right answers: translation, summarization of specific content, or extracting structured data.

Reference-free metrics assess output quality without a gold standard. Human ratings fall here: judges score responses on a scale without comparing to a reference. LLM-as-judge approaches can also work reference-free, using another model to evaluate outputs against criteria you define.

Reference-based metrics are cheaper and more consistent but only work when correct answers exist. Reference-free metrics handle open-ended tasks but introduce subjectivity and cost.
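As an illustration of the reference-based idea, the sketch below computes a unigram-overlap F1 between a candidate and a reference. It is a simplified stand-in in the spirit of ROUGE-1, not the official BLEU or ROUGE implementation: tokenization is plain whitespace splitting, and the function name `unigram_f1` is our own.

```python
from collections import Counter


def unigram_f1(candidate: str, reference: str) -> float:
    """Simplified overlap score: F1 over shared lowercase whitespace tokens."""
    cand_tokens = candidate.lower().split()
    ref_tokens = reference.lower().split()
    if not cand_tokens or not ref_tokens:
        return 0.0
    # Counter intersection keeps the minimum count of each shared token.
    overlap = sum((Counter(cand_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(cand_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


print(unigram_f1("the cat", "the cat sat on the mat"))
```

A score like this is cheap and repeatable, but it only rewards surface overlap: a correct paraphrase with different wording scores poorly, which is why it suits tasks with clear right answers.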

Direct Grading, Pairwise Comparison, and Ranking

Direct grading asks evaluators to score each output independently, for example: "Rate this response 1 to 5 on helpfulness." This approach scales well and produces absolute scores, but calibration varies across evaluators: one person's 4 is another's 3.

Pairwise comparison shows two outputs and asks which is better. This sidesteps most calibration issues, since evaluators only make relative judgments. It's particularly useful for A/B testing prompt variants or comparing models. The downside: ranking many options takes many comparisons, and you don't get absolute quality scores.

Ranking extends pairwise to multiple options. Given five responses, order them best to worst. This provides richer signal than pairwise but takes more evaluator effort.
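One simple way to turn pairwise judgments into a ranking is to compute each option's win rate. The sketch below assumes judgments arrive as `(winner, loser)` tuples and ignores ties; the variant names are placeholders. More careful approaches exist (rating models that account for opponent strength), so treat this as a starting point rather than a recommended method.

```python
from collections import defaultdict


def rank_by_win_rate(comparisons):
    """Rank options by the share of pairwise comparisons they won.

    comparisons: iterable of (winner, loser) tuples.
    Returns a list of (name, win_rate) sorted best first.
    """
    wins = defaultdict(int)
    games = defaultdict(int)
    for winner, loser in comparisons:
        wins[winner] += 1
        games[winner] += 1
        games[loser] += 1
    rates = {name: wins[name] / games[name] for name in games}
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)


# Hypothetical judgments between three prompt variants.
judgments = [
    ("prompt_a", "prompt_b"),
    ("prompt_a", "prompt_c"),
    ("prompt_b", "prompt_c"),
    ("prompt_a", "prompt_b"),
]
ranking = rank_by_win_rate(judgments)
print(ranking)
```

Note that the output is purely relative: the top variant won its comparisons, but nothing here says whether it is actually good.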

Simulation and Scenario Testing

Some capabilities require testing in context, not just scoring isolated outputs. Simulation-based evals put models in scenarios and measure outcomes. Can this agent complete a multi-step task? Does it recover from errors? How does it handle adversarial inputs?

This approach matters for AI agents, tool-use scenarios, and complex workflows. You create test environments, define success criteria, and run the model through scenarios. Evaluation becomes measuring task completion and behavioral patterns rather than scoring individual responses.
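A scenario harness can be sketched in a few lines. The example below is a toy under stated assumptions: `FlakyTool` simulates a tool that fails a fixed number of times, and `retrying_agent` is a hypothetical agent policy that simply retries. In a real setup the agent would be your LLM pipeline and the environment would mimic your actual tools, but the measurement is the same: did the task complete, and in how many steps?

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    name: str
    tool_failures: int  # how many times the tool errors before it works
    max_steps: int      # step budget given to the agent


class FlakyTool:
    """Simulated tool that raises an error a fixed number of times."""

    def __init__(self, failures: int):
        self.remaining_failures = failures

    def call(self) -> str:
        if self.remaining_failures > 0:
            self.remaining_failures -= 1
            raise RuntimeError("tool unavailable")
        return "ok"


def retrying_agent(tool: FlakyTool, max_steps: int):
    """Hypothetical agent: retry the tool until it succeeds or steps run out."""
    for step in range(1, max_steps + 1):
        try:
            tool.call()
            return True, step
        except RuntimeError:
            continue
    return False, max_steps


def run_scenarios(scenarios, agent):
    """Run each scenario in a fresh environment and record the outcome."""
    results = {}
    for scenario in scenarios:
        completed, steps = agent(FlakyTool(scenario.tool_failures), scenario.max_steps)
        results[scenario.name] = {"completed": completed, "steps": steps}
    return results


scenarios = [
    Scenario("happy_path", tool_failures=0, max_steps=3),
    Scenario("one_transient_error", tool_failures=1, max_steps=3),
    Scenario("persistent_outage", tool_failures=5, max_steps=3),
]
results = run_scenarios(scenarios, retrying_agent)
completion_rate = sum(r["completed"] for r in results.values()) / len(results)
print(results, completion_rate)
```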

Common Challenges in AI Evaluation

Evaluating AI models comes with challenges on multiple fronts. Technical, operational, and ethical issues all need attention. Here’s a quick overview:

| Category | Challenge | Description |
| --- | --- | --- |
| Technical | Data Quality | Poor or biased datasets lead to flawed evaluations. |
| Technical | Benchmark Contamination | Training-test overlap inflates scores. |
| Technical | Model Generalization | Works on benchmarks but may fail in real-world use. |
| Technical | Non-Deterministic Outputs | Same prompt can give varied responses. |
| Operational & Measurement | Defining "Good" | Subjective qualities are hard to measure. |
| Operational & Measurement | Scalability | Human evaluation is slow; automated metrics miss nuances. |
| Operational & Measurement | Standardization | Inconsistent metrics hinder comparisons. |
| Operational & Measurement | Incentives | Leaderboard focus or avoiding safety tests can skew results. |
| Ethical & Security | Bias & Fairness | Models can amplify biases. |
| Ethical & Security | Explainability | Black-box models are hard to interpret. |
| Ethical & Security | Security | Vulnerable to attacks or misuse. |
| Ethical & Security | Regulation | Rules require risk assessment; methods are still developing. |

The Three Gulfs in AI Evaluation

A useful framework identifies three gaps where evals can go wrong.

Gulf 1: Comprehension (Developer to Data)

This gulf exists between what developers think users need and what the evaluation data actually captures. You might build evals around your assumptions about use cases while missing how real users actually interact with the system. The eval passes, but users still have problems.

Fix this by using real user queries in your eval sets, not just synthetic examples you invented.

Gulf 2: Specification (Developer to LLM Pipeline)

This gulf appears between what you want the model to do and how you've actually configured it. Your prompts, system instructions, and parameters might not fully capture your intent. The eval measures something, but not quite the right thing.

Fix this by validating that your eval criteria match actual user needs, and that your prompts spell out those criteria explicitly rather than leaving them implied.

Gulf 3: Generalization (Data to LLM Pipeline)

This gulf emerges when the pipeline scores well on eval data but fails on real-world inputs. Your eval set might be too clean, too narrow, or unrepresentative, so prompts and settings tuned against it end up overfit to the eval distribution.

Fix this by using diverse, messy, realistic data and regularly refreshing eval sets with new production examples.

The Hidden Pitfalls of Evals

Several problems undermine eval reliability. Metric gaming happens when you optimize for the eval metric rather than actual quality. A model might learn to produce outputs that score well on BLEU without being genuinely good.

Over-optimization leads to similar issues. If you tune heavily against a fixed eval set, you overfit to those specific examples. Performance on the eval improves while real-world performance stagnates or degrades.

Evaluator bias affects human and LLM-based judgments. Human raters have preferences and inconsistencies. LLM judges have their own biases and can favor outputs similar to their own style.

Data contamination occurs when test examples leak into training data. The model memorizes answers rather than demonstrating genuine capability.

How to Design Effective AI Evals

Step 1: Define What Matters

Start by identifying what success looks like for your specific application. A customer support bot needs accuracy and helpfulness. A creative writing assistant needs originality and engagement. A code generation tool needs correctness and efficiency.

Translate these goals into measurable criteria. Avoid vague qualities like "good" or "smart." Define specific dimensions: factual accuracy, response completeness, appropriate tone, absence of hallucination, task completion rate.

Step 2: Gather Real, Messy Data

Synthetic eval data misses edge cases that real users generate. Pull examples from production logs, customer support tickets, user feedback, and actual queries. Include the weird stuff: typos, ambiguous requests, multi-part questions, and adversarial inputs.

Aim for diversity across use cases, user types, and difficulty levels. Your eval set should represent the full distribution of inputs your model will encounter.

Step 3: Build Targeted Evaluators

Choose evaluation methods matching your criteria. For factual accuracy, you might use reference-based checks against a knowledge base. For tone and helpfulness, human raters or LLM judges work better. For task completion, simulation-based testing makes sense.

Build evaluation pipelines that run automatically. Manual spot-checking doesn't scale. You need evals that run on every deployment, every prompt change, every model update.

Step 4: Analyze, Iterate, and Improve

Evals produce data. Use it. Look for patterns in failures. Which categories of inputs cause problems? Where does performance degrade? What do low-scoring outputs have in common?
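A first pass at failure analysis can be as simple as grouping results by input category and sorting by failure rate. The sketch below assumes each result is a dict with a `category` label and a numeric `score`; both field names and the 0.7 pass threshold are illustrative choices, not a standard.

```python
from collections import defaultdict


def failure_rate_by_category(results, pass_threshold=0.7):
    """Return (category, failure_rate) pairs, worst category first."""
    totals = defaultdict(int)
    failures = defaultdict(int)
    for result in results:
        totals[result["category"]] += 1
        if result["score"] < pass_threshold:
            failures[result["category"]] += 1
    rates = {category: failures[category] / totals[category] for category in totals}
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)


# Hypothetical eval results tagged by input category.
eval_results = [
    {"category": "billing", "score": 0.9},
    {"category": "billing", "score": 0.4},
    {"category": "multi_part", "score": 0.3},
    {"category": "multi_part", "score": 0.5},
    {"category": "typos", "score": 0.8},
]
breakdown = failure_rate_by_category(eval_results)
print(breakdown)
```

A breakdown like this points you at the category to read by hand first; the aggregate score alone would hide that multi-part questions fail every time.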

Use insights to improve prompts, add guardrails, fine-tune models, or update system design. Then re-eval to verify improvements. This feedback loop drives actual quality gains.

Step 5: Share and Act

Eval results should inform decisions across teams. Build dashboards showing performance trends. Alert on regressions. Share findings with product, engineering, and leadership.

Make eval results actionable. If accuracy drops below a threshold, block deployment. If a specific failure mode emerges, prioritize fixing it. Evals only matter if they influence what you build.
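A deployment gate along these lines might look like the sketch below. The thresholds (`min_accuracy`, `max_regression`) and the shape of the metrics dicts are assumptions; in practice you would pick them from your own baseline data and wire the result into whatever CI system you use, for instance by failing the job when the gate returns `False`.

```python
def deployment_gate(current, baseline, min_accuracy=0.85, max_regression=0.02):
    """Decide whether a candidate release may ship.

    current / baseline: dicts with an "accuracy" value between 0 and 1.
    Returns (passed, reasons), where reasons lists every failed check.
    """
    reasons = []
    if current["accuracy"] < min_accuracy:
        reasons.append(
            f"accuracy {current['accuracy']:.2f} is below the floor of {min_accuracy:.2f}"
        )
    drop = baseline["accuracy"] - current["accuracy"]
    if drop > max_regression:
        reasons.append(
            f"accuracy dropped {drop:.2f} from baseline, more than the allowed {max_regression:.2f}"
        )
    return len(reasons) == 0, reasons


passed, reasons = deployment_gate({"accuracy": 0.87}, {"accuracy": 0.90})
print(passed, reasons)
```

In this usage example the candidate clears the absolute floor but still gets blocked, because it regressed three points against the baseline. Checking both conditions catches slow erosion that a fixed floor alone would let through.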

Best Practices for LLM Evaluation

LLM evaluation works best when you go beyond a single metric. Automate what you can with small evaluator models or benchmarks, and reserve human review for nuances like creativity, tone, or safety. Use real-world edge cases to check generalization. Version your eval sets and prompts so results stay comparable over time, and keep metrics aligned with business goals so the process stays clear and practical.

Prompting Fundamentals and Outsourcing

Prompt quality directly affects eval reliability. Vague or ambiguous evaluation prompts produce inconsistent scores. When using LLM-as-judge, invest in clear, specific evaluation instructions with examples of good and bad outputs.
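As a rough illustration of what "clear, specific evaluation instructions" can mean, the sketch below builds a judge prompt from an explicit rubric and parses a score from the reply. The rubric wording, the `SCORE:` output convention, and both function names are assumptions made for this example. No model is called here; you would send the prompt through whichever LLM API you already use.

```python
import re

# Hypothetical rubric: anchor descriptions for scores 1, 3, and 5.
RUBRIC = {
    1: "Does not answer the question or contradicts the knowledge base.",
    3: "Partially answers the question; some details are missing or vague.",
    5: "Fully answers the question, accurately and concisely.",
}


def build_judge_prompt(question: str, answer: str, rubric: dict[int, str]) -> str:
    """Assemble a grading prompt with the rubric spelled out line by line."""
    rubric_lines = [f"{score}: {text}" for score, text in sorted(rubric.items())]
    return "\n".join([
        "You are grading a support bot's answer for helpfulness.",
        "Use this rubric:",
        *rubric_lines,
        "",
        f"Question: {question}",
        f"Answer: {answer}",
        "",
        "Explain your reasoning briefly, then end with a line 'SCORE: <1-5>'.",
    ])


def parse_score(judge_reply: str):
    """Extract the 1-5 score from the judge's reply, or None if it is missing."""
    match = re.search(r"SCORE:\s*([1-5])\b", judge_reply)
    return int(match.group(1)) if match else None


prompt = build_judge_prompt("How do I reset my password?", "Open Settings.", RUBRIC)
print(prompt)
print(parse_score("The answer is incomplete but correct. SCORE: 3"))
```

Returning `None` for an unparseable reply, instead of guessing, lets you count and inspect judge failures separately from low scores.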

Consider third-party eval services for human evaluation at scale. Platforms like Scale AI, Surge AI, or specialized services provide trained raters and quality control. This costs more than internal evaluation but produces more consistent results.

Application-Centric vs Foundation Model Evals

Evaluating a foundation model differs from evaluating your specific application. Foundation model evals test general capabilities across diverse benchmarks.

Application evals test performance on your actual use case with your actual users.

Don't assume strong benchmark performance means your application works well. A model might excel at general reasoning but fail on your domain-specific tasks. Build evals specific to your application.

Custom Metrics and Human-in-the-Loop Approaches

Generic metrics rarely capture everything that matters. Build custom evaluation criteria for your domain. A legal document assistant needs different metrics than a recipe generator.

Combine automated and human evaluation. Automated checks catch obvious issues at scale. Human review provides nuanced judgment on edge cases and subjective quality. Use automation for coverage and humans for depth.


Frequently Asked Questions

What are evals in machine learning?

Evals are systematic assessments that measure how well a model performs on specific tasks and criteria. In traditional ML, evals typically measure metrics like accuracy, precision, recall, and F1 score against held-out test sets. For LLMs, evals expand to cover output quality dimensions like relevance, factual accuracy, coherence, helpfulness, and safety. Teams run evals at multiple stages: during development to compare models and tune prompts, before deployment to verify production readiness, and continuously in production to catch regressions and monitor drift.

What does eval stand for?

Eval is shorthand for evaluation. The term became standard in ML research and has carried over into applied AI engineering. You'll see it used as both a noun ("run the evals") and a verb ("eval the new prompt variant"). In LLM contexts, evals usually refer to measuring model output quality rather than traditional metrics like perplexity or loss.

What is the difference between evals and testing?

Testing verifies deterministic code behavior. You write a unit test asserting that function X returns output Y for input Z. It passes or fails. The expected behavior is fixed and well-defined. Evals handle non-deterministic systems where the same input can produce different outputs, and where "correct" is often subjective or context-dependent.

Evals produce scores, distributions, and comparative rankings rather than binary pass/fail. Testing catches bugs in your code. Evals catch quality problems, behavioral drift, and edge cases in model outputs. Production AI systems need both: tests for infrastructure reliability, evals for output quality.

How much does it cost to implement evals for a production LLM application?

Costs break into three categories. Automated evals using LLM-as-judge approaches cost $0.01 to $0.10 per evaluation depending on the judge model (GPT-4 costs more than GPT-3.5) and prompt length. Running 1,000 evals daily adds up to $300 to $3,000 monthly. Human evaluation costs $0.50 to $5+ per judgment depending on task complexity, required expertise, and whether you use internal reviewers or platforms like Scale AI.

Engineering time is the hidden cost: building a basic pipeline takes 1 to 4 weeks, and maintaining eval sets requires ongoing investment as your product evolves.
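The monthly figures for automated evals follow from simple multiplication, assuming a 30-day month and the per-evaluation prices quoted above. The helper name below is our own:

```python
def monthly_judge_cost(evals_per_day: int, cost_per_eval: float, days: int = 30) -> float:
    """Estimated monthly spend on LLM-as-judge calls, in dollars."""
    return evals_per_day * cost_per_eval * days


low = monthly_judge_cost(1_000, 0.01)
high = monthly_judge_cost(1_000, 0.10)
print(f"${low:,.0f} to ${high:,.0f} per month")
```

Plugging in your own volume and judge pricing gives a quick sanity check before committing to a judge model.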

What tools should I use for LLM evals?

LangSmith integrates tightly with LangChain, offering tracing, evaluation runs, and dataset management in one platform. Braintrust focuses on eval management with strong collaboration features and supports teams running experiments across prompt variants. Phoenix from Arize emphasizes observability alongside evaluation, useful if you want monitoring and evals in one tool. OpenAI Evals provides an open-source framework for building custom evaluations with good documentation.

Promptfoo works well for prompt testing and comparison. For teams with simpler needs, custom scripts using pandas and basic LLM API calls often suffice initially. Choose based on your existing stack, team size, and whether you need collaboration features.

How do I fix evals that give inconsistent results?

Inconsistent evals usually stem from a few root causes. Small dataset size amplifies variance, so increase to 500+ examples for stable metrics. Ambiguous evaluation criteria confuse both human and LLM judges, so add explicit rubrics with examples of scores 1, 3, and 5. High temperature in LLM judges introduces randomness, so set temperature to 0 or 0.1. Evaluator disagreement is normal, so use multiple judges and average scores or take majority vote. If specific eval categories show high variance, break them into more granular criteria. Track inter-rater reliability metrics to identify where disagreement concentrates, then refine those criteria specifically.
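Two of these fixes are easy to sketch: combining several judges by majority vote, and tracking agreement with Cohen's kappa, one common inter-rater reliability metric for two raters. The implementation below is a plain-Python sketch for categorical labels; the `pass`/`fail` labels are placeholders, and treating a tied vote as `None` is a design choice you may want to change.

```python
from collections import Counter


def majority_vote(labels):
    """Return the most common label, or None when the top two are tied."""
    ranked = Counter(labels).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None
    return ranked[0][0]


def cohen_kappa(judge_a, judge_b):
    """Agreement between two judges, corrected for chance agreement."""
    if len(judge_a) != len(judge_b) or not judge_a:
        raise ValueError("both judges must label the same non-empty set of items")
    n = len(judge_a)
    observed = sum(a == b for a, b in zip(judge_a, judge_b)) / n
    freq_a, freq_b = Counter(judge_a), Counter(judge_b)
    labels = set(judge_a) | set(judge_b)
    # Chance agreement: probability both judges pick the same label independently.
    expected = sum((freq_a[label] / n) * (freq_b[label] / n) for label in labels)
    if expected == 1.0:
        return 1.0  # both judges used a single identical label throughout
    return (observed - expected) / (1 - expected)


judge_a = ["pass", "pass", "fail", "fail"]
judge_b = ["pass", "fail", "fail", "fail"]
print(majority_vote(["pass", "pass", "fail"]))
print(cohen_kappa(judge_a, judge_b))
```

Here the judges agree on three of four items, but kappa is only 0.5 because half of that agreement would be expected by chance. Computing kappa per criterion shows where the rubric needs tightening.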

How long does it take to set up a basic eval pipeline?

A minimal working pipeline takes 2 to 5 days: define criteria, build a test dataset of 100 to 200 examples, write evaluation prompts or scripts, and run your first batch. A production-quality system takes 2 to 4 weeks: automate runs on deployment, build dashboards for tracking trends, set up alerting on regressions, integrate with CI/CD, and establish processes for updating eval sets.

The ongoing work never stops. Eval sets need refreshing with new production examples, criteria need updating as your product evolves, and new failure modes require new test cases. Budget continuous time, not just initial setup.

What's the minimum dataset size needed for reliable evals?

It depends on what you're measuring and how much variance exists in your outputs. For directional insight on whether a change helps or hurts, 100 to 200 examples often suffice. You'll see clear trends even if the exact numbers aren't statistically robust. For confident decision-making on model comparisons or prompt changes, aim for 500+ examples. This gives you enough data to detect meaningful differences and avoid noise-driven conclusions.

Tasks with high output variance (creative writing, open-ended responses) need larger sets than constrained tasks (classification, extraction). Start with 100 to 200 examples to build your pipeline and learn what matters, then expand strategically. Focus additional examples on categories where you see high variance or frequent failures rather than adding uniformly across all cases.
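To see why these sizes are reasonable rules of thumb, you can estimate the margin of error on a pass rate. The sketch below uses the normal approximation for a binomial proportion at 95% confidence, which is an assumption on our part: it treats each example as an independent pass/fail trial and becomes unreliable for very small sets or pass rates near 0 or 1.

```python
import math


def margin_of_error(pass_rate: float, n: int, z: float = 1.96) -> float:
    """Approximate 95% margin of error for a pass rate measured on n examples."""
    return z * math.sqrt(pass_rate * (1 - pass_rate) / n)


for n in (100, 200, 500):
    print(n, round(margin_of_error(0.8, n), 3))
```

At an 80% pass rate, 100 examples give a margin of roughly 8 percentage points, 200 give about 5.5, and 500 give about 3.5. That is enough to see a large shift with a small set, while a 3-point difference between two prompts only becomes distinguishable from noise around the 500 mark.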
